Vloggers face this problem all the time: you are looking at the camera and talking, but when you glance at the computer screen you are startled to see yourself from about 10 to 15 seconds ago. This delay is called latency. In this post, we’ll explain what live streaming latency is and how it works.

What is Live Streaming Latency?

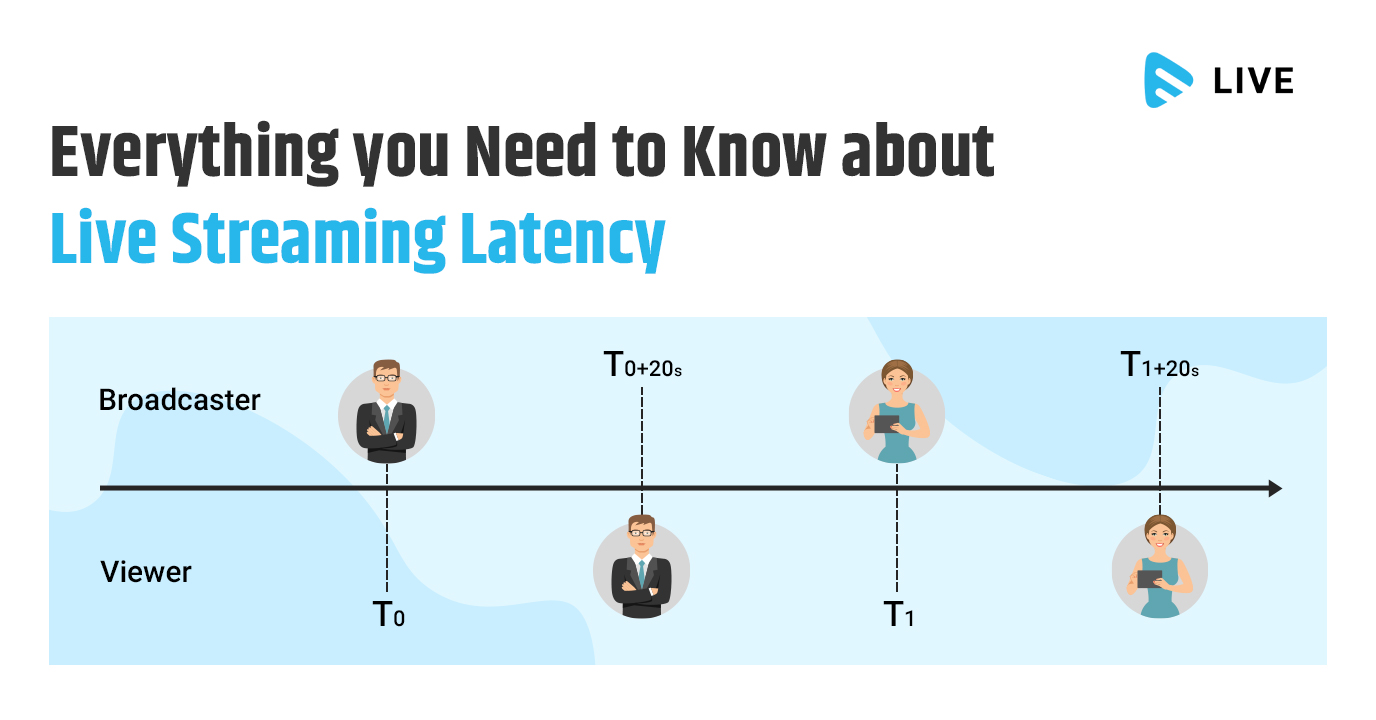

In video streaming, latency means ‘delay’ or ‘lag’. In a live streaming environment, it is the time between the moment something is captured by the camera and the moment it appears on the viewer’s screen. For example, if your friend waves in front of the camera and you see the wave on screen 10 seconds later, the latency is 10 seconds. In other words, latency is the time an action takes to travel from Point A (the camera) to Point B (the screen).
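As a minimal sketch of this definition, latency is simply the display time minus the capture time. The function name and the timestamps below are illustrative, and the sketch assumes both timestamps come from the same (or synchronized) clocks.

```python
from datetime import datetime, timedelta


def glass_to_glass_latency(captured_at: datetime, displayed_at: datetime) -> timedelta:
    """Return the delay between capture (Point A) and display (Point B)."""
    if displayed_at < captured_at:
        raise ValueError("display time cannot precede capture time")
    return displayed_at - captured_at


# Hypothetical timestamps: the wave is captured at 12:00:00 and shown at 12:00:10.
captured = datetime(2024, 1, 1, 12, 0, 0)
displayed = datetime(2024, 1, 1, 12, 0, 10)
latency = glass_to_glass_latency(captured, displayed)
print(f"Latency: {latency.total_seconds():.1f} s")  # prints "Latency: 10.0 s"
```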

So, when you stream live video, your viewers never see you in true real time; there is always some lag.

For more information about live streaming latency, read our whitepaper, Live Streaming Latency: A Glass to Glass Analysis.

What Factors Cause Latency?

An end-to-end live streaming pipeline is complex, consisting of a range of components including encoding, transcoding, delivery, and playback. Each adds a layer of latency, and the total can vary significantly depending on the components used and the architecture of the pipeline. So, let’s look at the factors contributing to live streaming latency:

1. Image Capture: Turning a live image into digital signals takes time, whether you use a single camera or a sophisticated video mixing system. At minimum, it takes the duration of one captured video frame (1/30th of a second, or about 33 milliseconds, at a 30fps frame rate). Advanced video mixers add further latency for decoding, processing, re-encoding, and re-transmitting.

To know more about frame rates and video resolutions, read our blog SD, HD and 4K – Streaming Video Resolutions Explained

2. Encoding & Packaging: The latency introduced here depends heavily on the encoding or compression technique used. Several compression standards are available, so the encoding process needs to be tuned to add as little delay as possible.

3. CDN: Delivering live video at scale requires most streaming service providers to leverage content delivery networks or CDNs. As a result, the video needs to propagate between different caches, introducing an additional layer of latency.

Also Read: Why is CDN Important for Live Streaming?

4. Last-Mile Delivery: Latency also depends on the end-user’s network connection, for example, whether they are on a cabled network at home, connected to a Wi-Fi hotspot, or using a mobile connection. If they are geographically far from the closest CDN endpoint, they may experience additional latency.

5. Video Player Buffer: Online video players need to buffer video to ensure a smooth playback experience. Buffer sizes are often defined in media specifications, but note that the larger the buffer, the higher the playback latency.

6. Transmission to Viewers: Protocols for viewing live video content fall into two categories, non-HTTP-based and HTTP-based, which differ in latency and scalability.

Non-HTTP-based protocols include RTSP and RTMP. These protocols use a combination of TCP and UDP communications to send media to viewers. They can deliver very low latency; however, their support for adaptive streaming is limited.

HTTP-based protocols (such as HLS, MPEG-DASH, and HDS) are designed to take advantage of standard web servers and content distribution networks. They work by breaking up the continuous media stream into segments that are 2–10 seconds long. HTTP-based protocols are better suited to live streaming at scale because of their broader feature support, scalability, and built-in support for adaptive bitrate streaming.

Minimum latency for non-HTTP-based protocols: About 5–10 milliseconds for the transmission step alone (capture, encoding, and network delays come on top of this)

Minimum latency for HTTP-based protocols: About 2 seconds
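The factors above can be combined into a rough latency budget. The sketch below is illustrative only: the capture term follows from the frame rate, the player buffer is modeled as segment duration times the number of buffered segments, and every other number (encoding, CDN, last mile, and the three-segment buffer) is a hypothetical placeholder rather than a measurement.

```python
def frame_duration_s(fps: float) -> float:
    """Minimum capture delay: the duration of one video frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


def player_buffer_s(segment_duration_s: float, buffered_segments: int) -> float:
    """Delay added by a player that buffers whole segments before playback."""
    return segment_duration_s * buffered_segments


# All values except the capture term are assumed placeholders, in seconds.
budget = {
    "capture": frame_duration_s(30),           # 1/30 s for a 30fps stream
    "encoding_and_packaging": 1.0,             # assumed
    "cdn": 0.5,                                # assumed
    "last_mile": 0.2,                          # assumed
    "player_buffer": player_buffer_s(2.0, 3),  # 2-second segments, 3 buffered (assumed)
}

total = sum(budget.values())
for stage, seconds in budget.items():
    print(f"{stage:<24}{seconds:6.3f} s")
print(f"{'total':<24}{total:6.3f} s")
```

With these assumed numbers the player buffer is by far the largest term, which is consistent with the point that a larger buffer means more playback latency.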

Also Read: Importance of Low Latency in Live Streaming

Wrapping Up

If you want streaming technology that gives you fine-grained control over latency and video quality, you will need a cloud video server. With Muvi Live, you can stream high-quality video with a latency of 10 seconds or less.

Sign up for a 14-day Free Trial to start delivering lightning-fast video streams!
