In the dynamic and fast-evolving world of online streaming, staying ahead of the curve is pivotal for streaming businesses seeking to deliver high-quality content to their audiences. As technology continues to evolve at a rapid pace, mastering video streaming protocols is no longer the exclusive domain of tech-savvy individuals.

This blog is designed for streaming professionals and businesses who are passionate about providing top-notch streaming experiences but may not possess an extensive technical background.

In the following sections, we will unravel a comprehensive list of top video streaming protocols, offering valuable insights, practical knowledge, and a competitive edge in the ever-expanding streaming landscape.

Whether you’re a streaming expert or just embarking on your streaming journey, this resource will empower you with the knowledge you need to make informed decisions and enhance your viewers’ online streaming experiences. So, let’s get started!

What Is a Video Streaming Protocol?

A video streaming protocol is a fundamental component of online video. In a nutshell, it is a standardized set of rules governing how video data is transmitted from a source to a destination over the internet, so that video content is delivered to our screens smoothly and efficiently.

Imagine streaming a movie or joining a live virtual event. Behind the scenes, a complex web of technologies and processes is at play. Video streaming protocols are the unsung heroes responsible for orchestrating this intricate symphony. They dictate the language that devices, servers, and networks must speak to ensure that video data arrives at its destination intact and ready for playback.

One of the key functions of these protocols is to package video data into manageable chunks (segments or packets) and transmit them over the internet. This chunking allows for efficient transmission and helps the video keep playing through network disruptions or varying bandwidth conditions. Compression itself is handled by codecs such as H.264, covered later in this list, but protocols determine which codecs and container formats can be carried and how the encoded data is delivered for decoding.

Many streaming protocols, particularly HTTP-based ones such as HLS and DASH, can adapt to changing network conditions in real time. The player monitors the available bandwidth and its own buffer level, then switches between quality levels of the stream to keep playback going. This dynamic adaptation is central to a smooth viewing experience, as it reduces buffering and stalls in less-than-ideal network situations.

For those involved in the world of video streaming, understanding these protocols is paramount. Content creators, streaming platform developers, and viewers alike benefit from a basic grasp of how these rules govern the transmission of video content. From established standards like HTTP Live Streaming (HLS) and Dynamic Adaptive Streaming over HTTP (DASH) to emerging technologies such as WebRTC and SRT (Secure Reliable Transport), the video streaming protocol landscape is both diverse and dynamic.

The following sections look at each of these, and several related technologies, in more detail.

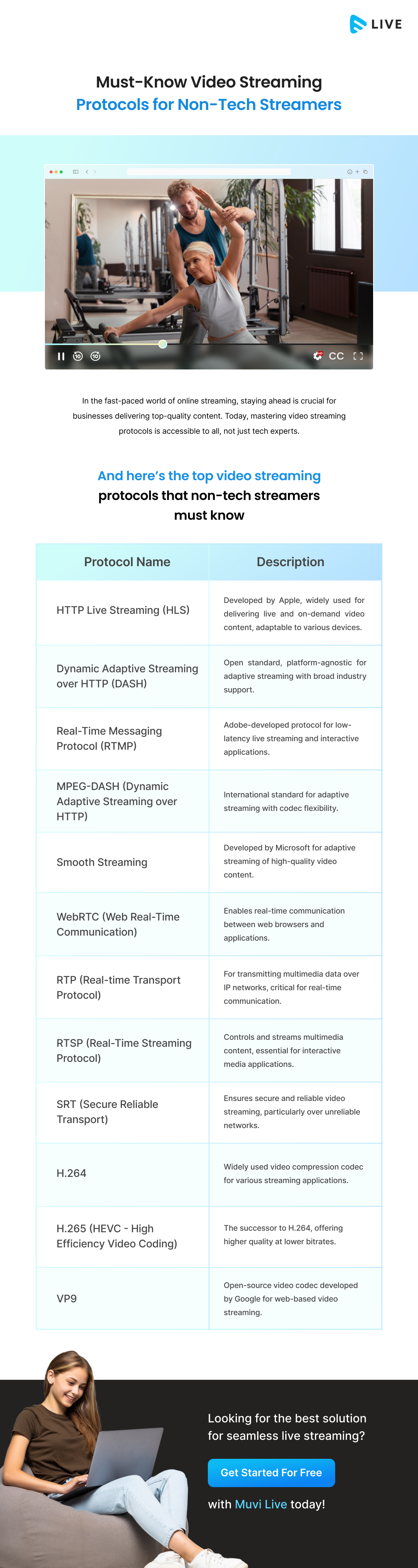

Top Video Streaming Protocols that a Non-Tech Streamer Must Know

1. HTTP Live Streaming (HLS)

HTTP Live Streaming (HLS) is a widely used video streaming protocol developed by Apple. It is designed to deliver live and on-demand video content to a wide range of devices, including smartphones, tablets, computers, and smart TVs. HLS works by breaking video content into small, manageable chunks or segments, which are then delivered over HTTP (Hypertext Transfer Protocol).

HLS is known for its adaptability and compatibility. It can adjust the quality of the video stream in real time based on the viewer’s network conditions, which helps keep playback smooth and reduces buffering. This protocol has become the de facto standard for streaming on Apple devices. Safari plays it natively, and other modern web browsers typically support it through JavaScript players.

HLS divides video content into short segments, typically lasting a few seconds each. These segments are available in multiple bitrates and resolutions. When a viewer requests a video stream, HLS’s adaptive streaming algorithm determines the viewer’s network conditions and device capabilities. It then selects the appropriate segment quality for uninterrupted playback. HLS also provides for encryption and content protection, making it suitable for secure streaming applications.
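As a rough illustration of the adaptive selection described above, the sketch below reads a small HLS master playlist and picks the highest-bitrate variant that fits a measured bandwidth. The playlist contents, the URIs, the `pick_variant` helper, and the 0.8 safety factor are all assumptions made for this example; real players use more elaborate logic.

```python
import re

# Hypothetical master playlist; URIs and bitrates are made up for illustration.
MASTER_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=6000000,RESOLUTION=1920x1080
high/index.m3u8
"""


def parse_variants(playlist: str) -> list:
    """Return (bandwidth_bps, uri) pairs from a master playlist, lowest first."""
    lines = [ln.strip() for ln in playlist.splitlines() if ln.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:"):
            # The lookbehind skips AVERAGE-BANDWIDTH and matches only BANDWIDTH.
            match = re.search(r"(?<![A-Z-])BANDWIDTH=(\d+)", line)
            if match and i + 1 < len(lines):
                # The URI of a variant is the line that follows its tag.
                variants.append((int(match.group(1)), lines[i + 1]))
    return sorted(variants)


def pick_variant(variants: list, measured_bps: float, safety: float = 0.8) -> tuple:
    """Pick the highest-bandwidth variant that fits within a safety margin."""
    budget = measured_bps * safety
    fitting = [v for v in variants if v[0] <= budget]
    # Fall back to the lowest quality when nothing fits the budget.
    return fitting[-1] if fitting else variants[0]
```

With a measured bandwidth of about 4 Mbps, `pick_variant(parse_variants(MASTER_PLAYLIST), 4_000_000)` would select the 2.5 Mbps variant, leaving headroom for fluctuations.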

2. Dynamic Adaptive Streaming over HTTP (DASH)

Dynamic Adaptive Streaming over HTTP (DASH) is an open standard for streaming video content. Unlike HLS, which is closely associated with Apple, DASH is platform-agnostic and enjoys broad industry support. It operates on the same principle of dividing video content into segments but uses a more flexible and adaptable approach.

DASH’s platform-agnostic nature makes it a versatile choice for streaming content to a wide array of devices and browsers. It allows content providers to deliver high-quality video streams while adapting to changing network conditions seamlessly. DASH’s flexibility and wide support in the industry have contributed to its popularity.

DASH uses an XML manifest file, called the Media Presentation Description (MPD), to describe the available video segments and their characteristics. These segments are encoded in various bitrates and resolutions. When a viewer requests a video stream, the player’s adaptive streaming algorithm selects the appropriate segment quality based on the viewer’s network conditions, device, and other factors. This dynamic adaptation helps maintain a continuous viewing experience at the best quality the connection allows.

3. Real-Time Messaging Protocol (RTMP)

The Real-Time Messaging Protocol (RTMP) is an older streaming protocol that was developed by Adobe. While its use has declined in recent years, it still plays a significant role in certain streaming scenarios. RTMP is primarily used for live streaming and interactive applications.

RTMP is important for its low latency streaming, making it suitable for real-time streaming applications like live gaming broadcasts and interactive webinars. It allows for bidirectional communication between the video streaming server and the viewer, enabling features like live chat and audience participation.

RTMP operates by establishing a persistent connection between the streaming server and the client. This connection allows for low-latency data transfer and real-time interaction. RTMP streams typically carry video encoded with H.264 and audio encoded with AAC, packaged in a format closely related to FLV (Flash Video). While RTMP was once widely used for delivering video to viewers, it has largely been replaced in that role by more modern protocols like HLS and DASH due to their broader compatibility and ease of use. It remains common for sending a live feed from an encoder to a streaming server.

4. MPEG-DASH (Dynamic Adaptive Streaming over HTTP)

MPEG-DASH is the formal name of the DASH standard introduced above. It is an international standard for adaptive streaming of multimedia content: a versatile, openly published specification that allows content providers to deliver video streams efficiently and adaptively over HTTP.

MPEG-DASH’s significance lies in its adaptability and wide-ranging compatibility. It works across various platforms and devices, making it a preferred choice for content providers aiming to reach a diverse audience. It is codec-agnostic, allowing for flexibility in choosing video and audio codecs, and offers dynamic bitrate adaptation, ensuring smooth playback even in fluctuating network conditions.

MPEG-DASH operates by dividing video content into small segments, similar to other adaptive streaming protocols like HLS and Smooth Streaming. These segments come in multiple bitrates and resolutions. When a viewer requests a video stream, the player’s adaptive streaming algorithm selects the appropriate segment quality based on the viewer’s network conditions, device capabilities, and other factors.

One key feature of DASH is its use of Media Presentation Description (MPD) files. These files contain essential information about the available video segments and their characteristics. MPD files provide the player with the necessary information to request and render the video segments, making DASH a highly adaptable and efficient streaming protocol.
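To make the role of the MPD more concrete, here is a minimal sketch that reads a tiny, hand-written MPD and lists the quality levels it advertises. The sample manifest is hypothetical and heavily simplified (real MPDs also describe segment URLs and timing), and `list_representations` is a helper name invented for this example.

```python
import xml.etree.ElementTree as ET

# Namespace used by MPEG-DASH manifests.
NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical, heavily simplified manifest for illustration only.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="360p" bandwidth="800000" width="640" height="360"/>
      <Representation id="720p" bandwidth="2500000" width="1280" height="720"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_text: str) -> list:
    """Return the id, bandwidth, and height of every Representation element."""
    root = ET.fromstring(mpd_text)
    representations = []
    for rep in root.findall(".//mpd:Representation", NS):
        representations.append({
            "id": rep.get("id"),
            "bandwidth": int(rep.get("bandwidth")),
            "height": int(rep.get("height")),
        })
    return representations
```

A player would use a list like this as the menu of qualities to choose from as network conditions change.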

5. Smooth Streaming

Smooth Streaming is a streaming protocol developed by Microsoft as part of its Media Platform. Like DASH and HLS, Smooth Streaming is designed for adaptive streaming of multimedia content over HTTP.

Smooth Streaming gained prominence for its ability to deliver high-quality video streams with minimal buffering. It has been especially popular in the enterprise sector, where it is used for webcasts, webinars, and interactive video experiences. Its seamless adaptation to network conditions ensures a smooth and enjoyable viewing experience for users.

Smooth Streaming divides video content into small, individual chunks, similar to other adaptive streaming protocols. These chunks are encoded at different bitrates and resolutions, allowing for adaptive streaming. When a viewer requests a video stream, Smooth Streaming’s adaptive algorithm selects the appropriate chunk quality based on the viewer’s network conditions.

One distinctive feature of Smooth Streaming is its use of two XML manifest files: a server manifest (.ism) that describes the encoded tracks and bitrates to the server, and a client manifest (.ismc) that tells the player which quality levels and chunks are available and how they are timed. The client player uses the client manifest to request and play the video chunks in sequence.

6. WebRTC (Web Real-Time Communication)

Web Real-Time Communication (WebRTC) is an open-source project that enables real-time communication between web browsers and applications. While not strictly a video streaming protocol, WebRTC has gained attention for its role in live streaming and interactive web applications.

WebRTC is essential for applications that require low-latency communication, such as video conferencing, live streaming, and online gaming. Its ability to establish direct peer-to-peer connections between devices without the need for plugins or third-party software has made it a valuable tool for building real-time, interactive web experiences.

WebRTC facilitates real-time communication by enabling direct audio and video communication between browsers or devices. It uses a combination of APIs and protocols to establish secure connections, including the Session Description Protocol (SDP) and the Interactive Connectivity Establishment (ICE) protocol.

In the context of live streaming, WebRTC allows content providers to transmit video and audio data to viewers’ browsers in real time, typically with very low latency. A signaling server is still needed to set up each connection, and larger audiences are usually served through relay or media servers rather than pure peer-to-peer links. The low latency makes it suitable for applications like live gaming broadcasts, webinars, and online auctions.

7. RTP (Real-time Transport Protocol)

Real-time Transport Protocol (RTP) is a network protocol primarily designed for transmitting multimedia data, including audio and video, over IP networks. RTP is often used in conjunction with a companion protocol called the RTP Control Protocol (RTCP), which helps monitor and manage the quality of the multimedia transmission.

RTP is a fundamental building block for real-time communication applications, such as video conferencing, live streaming, and VoIP (Voice over Internet Protocol). It provides the necessary framework for delivering time-sensitive data: sequence numbers and timestamps let the receiver put audio and video packets back in the correct order and play them out with the right timing.

RTP operates by breaking multimedia data into small packets, each with a sequence number and a timestamp to maintain synchronization. These packets are then transmitted over the network to their destination. RTP also allows for payload-specific information, indicating the type of media being transmitted, such as H.264 video or AAC audio.

While RTP itself does not address issues like packet loss or encryption, it serves as the foundation upon which other protocols, like RTCP and SRTP (Secure Real-time Transport Protocol), can build to enhance security and quality of service.
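The sequence number and timestamp mentioned above live in a fixed 12-byte header at the start of every RTP packet. The sketch below decodes that header; the example packet is synthetic and its values (payload type 96, the SSRC, and so on) are arbitrary choices for illustration.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Decode the fixed 12-byte RTP header (optional CSRC list and extensions ignored)."""
    if len(packet) < 12:
        raise ValueError("RTP packet is shorter than the 12-byte fixed header")
    byte0, byte1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": byte0 >> 6,            # top two bits, 2 for current RTP
        "padding": bool(byte0 & 0x20),
        "extension": bool(byte0 & 0x10),
        "csrc_count": byte0 & 0x0F,
        "marker": bool(byte1 & 0x80),
        "payload_type": byte1 & 0x7F,     # identifies the media format
        "sequence_number": seq,           # lets the receiver reorder packets
        "timestamp": timestamp,           # drives playout timing
        "ssrc": ssrc,                     # identifies the sending source
    }


# Synthetic packet for illustration: version 2, payload type 96, no marker bit.
EXAMPLE_PACKET = struct.pack("!BBHII", 0x80, 96, 1234, 90000, 0xDEADBEEF) + b"media"
```

Calling `parse_rtp_header(EXAMPLE_PACKET)` returns a dictionary with the version, payload type, sequence number, and timestamp that a receiver would use to order and schedule the media.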

8. RTSP (Real-Time Streaming Protocol)

Real-Time Streaming Protocol (RTSP) is a network control protocol designed for controlling and streaming multimedia content. Unlike RTP, which primarily focuses on transporting media data, RTSP focuses on the control aspect of multimedia streaming, allowing clients to request and control media streams from servers.

RTSP is crucial for interactive multimedia applications that require user interaction with streaming media, such as seeking to a specific time in a video or pausing and resuming playback. It provides the means for clients to establish and control media sessions with servers, making it valuable for video-on-demand services, IP cameras, and live streaming applications.

RTSP operates as a client-server protocol, with the client initiating requests to the server. Clients send RTSP commands to the server, requesting media streams, controlling playback, and performing actions like pausing, seeking, or changing the stream’s quality.
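RTSP commands are plain-text requests that look a lot like HTTP. As a minimal sketch, the helper below formats such a request; the function name, the URL, and the session identifier are placeholders invented for this example, and a real client would also send the text over a network connection and parse the reply.

```python
def build_rtsp_request(method: str, url: str, cseq: int, headers: dict = None) -> str:
    """Format an RTSP/1.0 request as text, ready to be sent to a server."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    # Header lines end with CRLF, and a blank line ends the request.
    return "\r\n".join(lines) + "\r\n\r\n"


# Hypothetical camera URL and session id, for illustration only.
describe = build_rtsp_request("DESCRIBE", "rtsp://example.com/cam1", 1)
pause = build_rtsp_request("PAUSE", "rtsp://example.com/cam1", 3, {"Session": "abc123"})
```

A typical session sends DESCRIBE, SETUP, and PLAY in that order, with PAUSE and TEARDOWN available once playback has started.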

One notable feature of RTSP is its ability to work in conjunction with other streaming protocols like RTP. RTSP can request a media stream from an RTP server and control its playback. This combination of protocols enables the delivery of interactive and customized multimedia experiences to viewers.

9. SRT (Secure Reliable Transport)

Secure Reliable Transport (SRT) is a relatively new and open-source video streaming protocol developed by Haivision. SRT is designed to address the challenges of transmitting high-quality video over unreliable networks, such as the public internet. It emphasizes security, reliability, and low latency.

SRT has gained rapid adoption in the broadcasting and live streaming industry due to its ability to deliver secure and high-quality video streams even in challenging network conditions. It is particularly valuable for remote contribution and distribution of live content, making it an attractive choice for broadcasters, content providers, and online streaming platforms.

SRT incorporates several key features to ensure secure and reliable video transmission. It uses end-to-end encryption to protect the content, making it suitable for transmitting sensitive information. SRT also employs dynamic packet recovery mechanisms to compensate for packet loss, ensuring smooth and uninterrupted video playback.

One of SRT’s standout features is its ability to cope with varying network conditions in real time. It retransmits lost packets within a configurable latency window, so operators can trade a little extra delay for more resilience on poor links. SRT does not switch video quality on its own; any bitrate adaptation is handled by the encoder or application using it.

10. H.264

H.264, also known as Advanced Video Coding (AVC), is a widely adopted video compression codec developed by the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG). It is known for its efficient compression capabilities, making it one of the most commonly used codecs for video streaming, video conferencing, and digital television.

H.264’s significance lies in its ability to deliver high-quality video at lower bitrates, which translates to reduced bandwidth requirements and storage space. This efficiency makes it suitable for a wide range of applications, from online video streaming platforms like YouTube and Netflix to video conferencing tools like Zoom and Skype.

H.264 employs a range of compression techniques, including inter-frame prediction (motion estimation and compensation) and intra-frame prediction, followed by an integer transform closely related to the discrete cosine transform, quantization, and entropy coding. These techniques help reduce redundancy in video data, allowing for significant compression while preserving visual quality. H.264 also supports multiple profiles, enabling a balance between compression efficiency and computational complexity to suit different use cases.

11. H.265 (HEVC – High-Efficiency Video Coding)

H.265, also known as High-Efficiency Video Coding (HEVC), represents the next step in video compression technology. It was jointly developed by the ITU-T VCEG and the ISO/IEC MPEG and serves as the successor to H.264. HEVC aims to deliver higher video quality at lower bitrates, making it a game-changer for high-definition and 4K video content.

HEVC is crucial in an era of increasing demand for high-resolution video content. It provides better compression efficiency than H.264, allowing for improved video quality at the same bitrate or reduced bitrate for the same quality. This makes it an ideal choice for ultra-high-definition video streaming, 4K TV broadcasting, and video surveillance systems.

HEVC introduces several advanced compression techniques, including larger block sizes, improved motion compensation, and more efficient intra-frame coding. These innovations reduce the amount of data needed to represent each frame accurately, resulting in superior compression performance. While HEVC’s computational complexity is higher than H.264, modern hardware and software solutions have enabled widespread adoption.

12. VP9

VP9 is an open-source video compression codec developed by Google. It is part of the WebM project and is designed to offer efficient compression for web-based video streaming and online content delivery. VP9 competes directly with H.264 and H.265 in the video compression landscape.

VP9 is significant for its role in advancing open-source video compression technology. It provides a compelling alternative to proprietary codecs like H.264 and HEVC, allowing content creators and streaming platforms to reduce bandwidth costs while maintaining video quality. VP9 is particularly valuable for web-based content, where efficiency and compatibility are key considerations.

VP9 employs a range of compression techniques, including improved intra-frame coding, variable block sizes, and entropy coding. It also offers support for scalability, enabling the delivery of adaptive streaming content to devices with varying display resolutions and bandwidth capabilities. VP9 is widely supported by web browsers, making it a popular choice for web-based video streaming.

13. AV1

AV1, or AOMedia Video 1, is an open-source video compression codec developed by the Alliance for Open Media (AOMedia), a consortium of technology companies, including Google, Netflix, Amazon, and Microsoft. It was introduced as a successor to VP9 and aims to provide exceptional video compression efficiency while remaining royalty-free and open-source.

AV1 holds significant importance in the ever-evolving landscape of video compression. Its primary focus is on delivering high video quality at lower bitrates, making it ideal for streaming high-definition and 4K video content over the internet. Being royalty-free, it avoids the licensing fees associated with patent-licensed codecs like H.264 and HEVC (H.265).

AV1 employs a variety of advanced compression techniques, including larger block sizes, improved motion compensation, and sophisticated entropy coding. These techniques help reduce redundancy in video data, resulting in superior compression efficiency. AV1 also supports features like scalable video coding, which enables adaptive streaming to devices with varying capabilities and network conditions.

14. MPEG-2

Moving Picture Experts Group-2 (MPEG-2) is a video compression standard developed by the MPEG group. It was introduced in the 1990s and played a pivotal role in the transition from analog to digital television. MPEG-2 is known for its versatility, as it supports a wide range of video resolutions and frame rates.

MPEG-2 is historically significant as it laid the groundwork for digital television broadcasting. It was the standard used for DVD video, digital TV broadcasts, and early digital video cameras. While newer codecs like H.264 and HEVC have largely superseded MPEG-2 in recent years, it still plays a role in legacy systems and equipment.

MPEG-2 uses a combination of inter-frame and intra-frame compression techniques to reduce video data. It divides video frames into blocks and applies discrete cosine transform (DCT) to compress them. The compression efficiency of MPEG-2 is not as high as modern codecs, but it was groundbreaking when introduced and served as a foundation for later video compression standards.

15. MPEG-4

MPEG-4 is a versatile video compression standard developed by the MPEG group. It was introduced as an evolution of MPEG-2 and brought significant improvements in video compression efficiency. MPEG-4 is known for its ability to handle various multimedia content, including video, audio, and interactive applications.

MPEG-4 is crucial for its adaptability and suitability for diverse multimedia applications. Its Part 10, Advanced Video Coding (AVC), is the same codec as H.264 described above and significantly improved compression efficiency, making it ideal for digital video broadcasting, video conferencing, and multimedia streaming over the internet.

MPEG-4 uses a range of compression techniques, including motion compensation, discrete cosine transform (DCT), and entropy coding. One of its key features is its support for object-based coding, which allows the encoding of individual objects within a video scene. This flexibility enables interactive multimedia applications and is a distinguishing feature of MPEG-4.

16. WebM

WebM is an open, royalty-free multimedia container format developed by the WebM Project, a collaboration between Google, Mozilla, and many other organizations. It is designed specifically for web-based media content and is often used for HTML5 video playback.

WebM is significant for its open and royalty-free nature, making it a preferred choice for web developers, content creators, and web browsers. It provides a streamlined solution for delivering multimedia content on the internet while avoiding licensing fees associated with some proprietary formats.

WebM typically contains video streams compressed using the VP8 or VP9 video codecs and audio streams compressed with the Vorbis or Opus audio codecs. The use of open and efficient codecs contributes to WebM’s popularity for web video. It is compatible with most modern web browsers, making it a versatile choice for web-based multimedia content.

17. Ogg

Ogg is a multimedia container format developed by the Xiph.Org Foundation. It is designed to be open and free of patent restrictions, making it a suitable choice for open-source and free software projects. Ogg is often associated with the Vorbis audio codec and the Theora video codec.

Ogg is significant for its commitment to open standards and avoiding proprietary restrictions. It serves as a container for various multimedia content, making it suitable for audio and video playback, streaming, and recording. It provides an alternative to proprietary formats that require licensing fees.

Ogg can encapsulate audio and video streams encoded with a variety of codecs. While Vorbis and Theora are commonly associated with Ogg, it can also contain other codecs, including FLAC for lossless audio and Speex for speech compression. Ogg files are widely supported in open-source multimedia software and media players.

18. FLV (Flash Video)

Flash Video (FLV) is a multimedia container format developed by Adobe. It gained popularity as the preferred format for streaming video content on the web using Adobe Flash Player. FLV files use the .flv file extension.

FLV was historically important for its role in web-based video streaming, particularly during the era of Adobe Flash. It enabled the delivery of video content across various platforms and browsers, offering interactive features and customization options.

FLV typically contains video and audio streams encoded with various codecs, including H.264 for video and AAC for audio. FLV files are designed for efficient streaming, enabling the playback of video content while it is still being downloaded. While Adobe Flash Player has been largely phased out in favor of modern web technologies, FLV files may still be encountered in legacy content.
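Container formats such as WebM, Ogg, and FLV can usually be recognized from the first few bytes of a file, often called a signature or magic number. The sketch below checks for those three signatures; the function name and return labels are invented for this example, and because WebM shares its signature with the Matroska format it is based on, the two cannot be told apart from these bytes alone.

```python
def sniff_container(data: bytes) -> str:
    """Guess a container format from the first bytes of a file."""
    if data.startswith(b"\x1a\x45\xdf\xa3"):
        # EBML header, shared by WebM and Matroska files.
        return "webm-or-matroska"
    if data.startswith(b"OggS"):
        # Every Ogg page begins with this capture pattern.
        return "ogg"
    if data.startswith(b"FLV\x01"):
        # "FLV" signature followed by version 1.
        return "flv"
    return "unknown"
```

In practice this would be called with the first handful of bytes read from a file, for example `sniff_container(open(path, "rb").read(16))`, where `path` is whatever file is being inspected.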

19. TS (Transport Stream)

Transport Stream (TS), formally the MPEG transport stream, is a container format used for the transmission and storage of audio, video, and data. It is often employed in digital broadcasting, such as terrestrial, cable, and satellite television, as well as in the distribution of media over the internet, where it is a common segment format for HLS. TS is known for its resilience to transmission errors and its adaptability to various transmission environments.

TS is vital for ensuring the reliable and efficient delivery of multimedia content, particularly in broadcasting scenarios where signal quality may vary. Its small, fixed-size packets and built-in error detection help receivers recover quickly from network or transmission errors, while broadcast systems typically layer forward error correction on top.

TS is based on packetization, where audio, video, and data are divided into fixed-size 188-byte packets for transmission. Each packet starts with a 4-byte header containing a sync byte, an error indicator, a packet identifier (PID), and a continuity counter, followed by payload data. This structure allows receivers to resynchronize and to detect lost or damaged packets, enhancing the overall reliability of the media transport process.
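To show what a TS packet header looks like in practice, the sketch below decodes the 4-byte header of a single 188-byte packet: the sync byte 0x47, the error and start flags, the 13-bit packet identifier (PID), and the 4-bit continuity counter. The example packet is synthetic, and its PID and counter values are arbitrary.

```python
TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def parse_ts_header(packet: bytes) -> dict:
    """Decode the 4-byte header of one MPEG transport stream packet."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a transport stream packet must be exactly 188 bytes")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing sync byte 0x47; the stream is out of sync")
    return {
        "transport_error": bool(packet[1] & 0x80),     # set when the packet is known to be damaged
        "payload_unit_start": bool(packet[1] & 0x40),  # a new frame or table starts here
        "pid": ((packet[1] & 0x1F) << 8) | packet[2],  # 13-bit id of the audio, video, or data stream
        "continuity_counter": packet[3] & 0x0F,        # 4-bit counter; a gap suggests lost packets
    }


# Synthetic packet for illustration: PID 256, continuity counter 7, empty payload.
EXAMPLE_TS_PACKET = bytes([0x47, 0x41, 0x00, 0x17]) + bytes(184)
```

A receiver that sees the continuity counter jump unexpectedly for a given PID knows that packets were lost and can react, for example by waiting for the next keyframe.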

20. CMAF (Common Media Application Format)

Common Media Application Format (CMAF) is a standard for the delivery of multimedia content over the internet, published by the Moving Picture Experts Group (MPEG) as ISO/IEC 23000-19 following a joint proposal from Apple and Microsoft. CMAF aims to harmonize and standardize media container formats across delivery protocols to simplify streaming workflows and reduce complexity.

CMAF addresses the fragmentation and complexity of multimedia streaming by providing a unified format that can be used across various streaming platforms and devices. It offers benefits such as reduced storage and encoding costs, improved streaming latency, and broader compatibility.

CMAF employs a segmented structure based on fragmented MP4, where media content is divided into smaller segments, each with its own header and metadata. These segments can be stored and delivered independently, allowing for adaptive streaming and low-latency streaming scenarios. Because the same segments can be referenced from both HLS playlists and DASH manifests, content only needs to be encoded and stored once. CMAF can support various codecs and streaming protocols, making it versatile and adaptable to different streaming environments.
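CMAF segments use the fragmented MP4 (ISO base media file format) layout, in which a file is a sequence of "boxes", each starting with a 4-byte size and a 4-byte type. The sketch below walks the top-level boxes of a byte string. The sample data is a synthetic stand-in for a media fragment (a `moof` metadata box followed by an `mdat` media box) rather than real video, and `make_box` and `iter_boxes` are helper names invented for this example.

```python
import struct


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a box: 4-byte big-endian size, 4-byte type, then the payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


def iter_boxes(data: bytes):
    """Yield (type, size) for each top-level box in the data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        header_size = 8
        if size == 1:
            # A size of 1 means the real size follows as a 64-bit value.
            if offset + 16 > len(data):
                break
            size = struct.unpack(">Q", data[offset + 8:offset + 16])[0]
            header_size = 16
        elif size == 0:
            # A size of 0 means the box runs to the end of the data.
            size = len(data) - offset
        if size < header_size:
            break  # malformed box; stop rather than loop forever
        yield box_type.decode("ascii", "replace"), size
        offset += size


# Synthetic fragment for illustration: placeholder metadata, then placeholder media bytes.
SAMPLE_FRAGMENT = make_box(b"moof", bytes(8)) + make_box(b"mdat", b"video-bytes")
```

Listing the boxes with `list(iter_boxes(SAMPLE_FRAGMENT))` shows the metadata box followed by the media box, which is the basic pattern a player expects in each fragment.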

21. BBR (Bottleneck Bandwidth and Round-trip propagation time)

BBR, short for Bottleneck Bandwidth and Round-trip propagation time, is a congestion control algorithm developed by Google for improving network performance. Among other goals, BBR is designed to avoid bufferbloat, which occurs when excessive buffering in network devices leads to increased latency and reduced quality of service.

BBR is crucial for optimizing network performance, particularly in scenarios where low latency and efficient use of available bandwidth are essential, such as real-time communication, online gaming, and multimedia streaming. It helps prevent the negative effects of bufferbloat, ensuring smoother and more responsive network experiences.

BBR dynamically adjusts the sending rate of data packets based on measurements of the network. Rather than treating packet loss as its main congestion signal, it continuously estimates the bottleneck bandwidth and the minimum round-trip time (RTT) to determine the optimal sending rate. By controlling the sending rate, BBR helps prevent network congestion and bufferbloat, resulting in reduced latency and improved network quality.
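The core idea can be illustrated with a little arithmetic. If a sender knows the bottleneck bandwidth and the minimum round-trip time, their product (the bandwidth-delay product) is roughly how much data can be "in flight" without filling up queues. The sketch below computes this from a few made-up measurements; it is a simplification for illustration and not the actual BBR algorithm, which uses time-windowed filters and several operating phases.

```python
def estimate_bdp(samples: list) -> tuple:
    """Estimate bottleneck bandwidth, minimum RTT, and bandwidth-delay product.

    samples is a list of (delivery_rate_bits_per_second, rtt_seconds) pairs.
    """
    # The highest delivery rate seen approximates the bottleneck bandwidth.
    bottleneck_bps = max(rate for rate, _ in samples)
    # The lowest RTT seen approximates the delay of the path with empty queues.
    min_rtt = min(rtt for _, rtt in samples)
    # Data that fits "in the pipe" at once, converted from bits to bytes.
    bdp_bytes = bottleneck_bps * min_rtt / 8
    return bottleneck_bps, min_rtt, bdp_bytes


# Made-up measurements for illustration: (bits per second, seconds).
SAMPLES = [(8_000_000, 0.050), (10_000_000, 0.040), (9_000_000, 0.080)]
```

For these samples the estimate is a 10 Mbps bottleneck and a 40 ms minimum RTT, which gives about 50,000 bytes in flight. Sending much more than that would only add queueing delay.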

22. QUIC (Quick UDP Internet Connections)

QUIC, originally an acronym for Quick UDP Internet Connections, is a transport layer protocol first developed by Google and later standardized by the IETF to enhance the efficiency and security of internet connections. QUIC is designed to overcome some of the limitations of traditional TCP (Transmission Control Protocol) combined with TLS (Transport Layer Security) by reducing latency and improving the reliability of data transmission.

QUIC is essential in an era where low latency and security are paramount for internet communication. It achieves this by combining the features of multiple protocols, including TCP, TLS, and HTTP/2, into a single streamlined protocol. QUIC has gained adoption in web browsers, online services, and content delivery networks, making web pages load faster and reducing video buffering times.

QUIC operates over the User Datagram Protocol (UDP) rather than TCP. This design choice allows for faster connection setup and reduced latency because it avoids some of the inherent overhead of TCP. QUIC also incorporates features like stream multiplexing, where a lost packet on one stream does not hold up the others, and connection migration, which keeps a session alive when a device switches networks. It encrypts data by default, improving security and privacy for internet users.

23. MJPEG (Motion JPEG)

Motion JPEG (MJPEG) is a video compression format that encodes video streams as a sequence of JPEG images. Unlike traditional video codecs, which use inter-frame compression, MJPEG compresses each frame independently. It has been used in various applications, including webcams, digital cameras, and video capture cards.

MJPEG is significant for its simplicity and suitability for certain applications. Since each frame is compressed individually, there is no need for complex encoding and decoding processes, making it relatively easy to implement and decode. It has found applications in scenarios where low-latency video capture and playback are essential.

In MJPEG, each video frame is compressed using the JPEG image compression standard. This means that each frame is a standalone image with no reference to previous or subsequent frames. While MJPEG offers high-quality video without inter-frame artifacts, it can be less efficient in terms of compression compared to modern video codecs like H.264 or H.265.
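Because every MJPEG frame is a complete JPEG image, a stream can be cut into frames by looking for the JPEG start-of-image marker (bytes FF D8) and end-of-image marker (bytes FF D9). The sketch below does this on a synthetic byte string. It is a naive scan for illustration only: real JPEG files can contain embedded thumbnails with their own markers, so production code would parse the JPEG structure properly.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_mjpeg_frames(stream: bytes) -> list:
    """Split a raw MJPEG byte stream into individual JPEG frames (naive scan)."""
    frames = []
    position = 0
    while True:
        start = stream.find(SOI, position)
        if start == -1:
            break
        end = stream.find(EOI, start + len(SOI))
        if end == -1:
            break  # incomplete final frame; wait for more data
        frames.append(stream[start:end + len(EOI)])
        position = end + len(EOI)
    return frames


# Synthetic stream for illustration: two fake "frames" separated by filler bytes.
SAMPLE_STREAM = SOI + b"frame-one" + EOI + b"--filler--" + SOI + b"frame-two" + EOI
```

Each returned frame can be decoded on its own by any JPEG decoder, which is why MJPEG is simple to work with even though it compresses less efficiently.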

24. NDI (Network Device Interface)

Network Device Interface (NDI) is a technology developed by NewTek for video over IP (Internet Protocol). NDI enables the transmission of high-quality, low-latency video and audio signals over standard Ethernet networks. It has gained popularity in the broadcasting, live streaming, and video production industries.

NDI is essential for simplifying video production workflows and enabling real-time, high-quality video transport over existing network infrastructure. It eliminates the need for dedicated video cables and hardware, allowing for more flexible and cost-effective video production setups.

NDI works by encoding video and audio signals into IP packets, which can be transmitted over standard Ethernet networks. NDI-enabled devices can discover each other on the network and establish direct connections for video and audio transmission. NDI also supports features like low-latency streaming, multicast support, and high-quality video formats, making it suitable for a wide range of professional video applications.

25. UDP (User Datagram Protocol)

User Datagram Protocol (UDP) is one of the core transport layer protocols in the Internet Protocol (IP) suite. It provides a connectionless and lightweight means of data transmission. UDP is known for its simplicity and speed, making it ideal for applications where speed and minimal overhead are more critical than error checking and data integrity.

UDP is essential for scenarios where real-time or low-latency communication is paramount. It is often used for multimedia streaming and online gaming. VoIP providers for businesses also rely on UDP to transmit voice calls efficiently over the internet. UDP’s minimalistic approach allows for rapid data transfer without the overhead associated with more robust protocols like TCP.

UDP sends data in the form of datagrams, which are self-contained units of information. Each datagram includes the source and destination port numbers, a length field, a checksum for optional error checking, and the data payload. Unlike TCP, UDP does not establish a connection before data transmission and does not guarantee the order or delivery of data packets. This lightweight nature makes UDP suitable for time-sensitive applications.
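The four header fields described above take up just 8 bytes in total. As a minimal sketch, the code below builds and parses a UDP datagram by hand to show the layout. The port numbers and payload are arbitrary examples, and the checksum is left at zero, which in IPv4 means "not computed". In real applications the operating system builds this header when a program sends data through a UDP socket.

```python
import struct


def build_udp_datagram(src_port: int, dst_port: int, payload: bytes, checksum: int = 0) -> bytes:
    """Build a UDP datagram: an 8-byte header followed by the payload."""
    length = 8 + len(payload)  # the length field covers header plus payload
    header = struct.pack("!HHHH", src_port, dst_port, length, checksum)
    return header + payload


def parse_udp_datagram(datagram: bytes) -> dict:
    """Split a UDP datagram back into its header fields and payload."""
    if len(datagram) < 8:
        raise ValueError("a UDP datagram needs at least an 8-byte header")
    src_port, dst_port, length, checksum = struct.unpack("!HHHH", datagram[:8])
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "length": length,
        "checksum": checksum,
        "payload": datagram[8:length],
    }


# Arbitrary example values for illustration.
EXAMPLE_DATAGRAM = build_udp_datagram(50000, 5004, b"video-chunk")
```

Compared with TCP, there are no sequence numbers or acknowledgments here, which is exactly why UDP adds so little overhead and delay.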

26. TCP (Transmission Control Protocol)

Transmission Control Protocol (TCP) is another fundamental transport layer protocol in the IP suite. TCP provides a reliable, connection-oriented communication method that ensures data integrity, sequencing, and flow control. It is the backbone of many internet applications and services.

TCP’s reliability and error-checking mechanisms are crucial for applications where data accuracy and order are paramount. It is used extensively for web browsing, email, file transfer (FTP), and any scenario where data integrity is critical. TCP guarantees that data packets arrive at their destination without corruption and in the correct order.

TCP establishes a connection between the sender and receiver before data transmission begins. It uses a three-way handshake to set up the connection, ensuring both parties are ready to exchange data. Once the connection is established, TCP manages data transmission by dividing the data into segments, adding sequence numbers, and employing acknowledgments to confirm successful receipt. If any segment is lost or corrupted, TCP retransmits it, guaranteeing reliable delivery.
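
The interplay of sequence numbers, acknowledgments, and retransmission can be sketched with a small in-memory simulation. This is a deliberately simplified, stop-and-wait style model and not how a real TCP stack is implemented (real TCP uses sliding windows, timers, and congestion control). The names `segment` and `deliver`, and the `drop_first_attempt_of` parameter used to simulate loss, are assumptions made for this example.

```python
def segment(data: bytes, size: int) -> list:
    """Split data into (sequence_number, payload) pairs; the sequence number is the byte offset."""
    return [(offset, data[offset:offset + size]) for offset in range(0, len(data), size)]


def deliver(data: bytes, size: int, drop_first_attempt_of=frozenset()):
    """Send segments one at a time and retransmit any segment that is not acknowledged.

    Returns the reassembled data and the total number of transmissions.
    """
    received = {}          # receiver's buffer, keyed by sequence number
    already_dropped = set()
    transmissions = 0

    for seq, payload in segment(data, size):
        acknowledged = False
        while not acknowledged:
            transmissions += 1
            if seq in drop_first_attempt_of and seq not in already_dropped:
                # Simulated loss: the segment never arrives, so no ACK comes back
                # and the sender tries again.
                already_dropped.add(seq)
                continue
            received[seq] = payload
            acknowledged = True  # receiver's ACK reaches the sender

    # The receiver reorders by sequence number before handing data to the application.
    reassembled = b"".join(received[seq] for seq in sorted(received))
    return reassembled, transmissions
```

Calling `deliver(b"abcdefghij", 4, drop_first_attempt_of={4})` loses the middle segment once, yet the receiver still ends up with the complete data in order, at the cost of one extra transmission. That extra round trip is the latency price TCP pays for reliability.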

27. IGMP (Internet Group Management Protocol)

The Internet Group Management Protocol (IGMP) is a network-layer protocol used by hosts and adjacent routers on IPv4 networks to establish multicast group memberships. IGMP plays a crucial role in enabling multicast communication, where data is sent from one source to multiple recipients efficiently.

IGMP is essential for optimizing network bandwidth and enabling multicast applications such as IPTV, online gaming, and video conferencing. It ensures that data is only delivered to hosts that have expressed an interest in receiving it, reducing unnecessary network traffic.

IGMP operates at the network layer (Layer 3) of the OSI model. When a host or device wishes to join a multicast group, it sends an IGMP membership report to its local router. The router, in turn, uses IGMP to track group memberships and forward multicast traffic only to the relevant hosts. IGMP supports different versions, with IGMPv2 and IGMPv3 being widely used for modern multicast applications.
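
As an illustration of what a membership report looks like on the wire, the following Python sketch builds an 8-byte IGMPv2 report: a type byte (0x16 for a Version 2 Membership Report), a maximum response time byte, a 16-bit checksum, and the 4-byte group address. The helper names `internet_checksum` and `build_membership_report` and the group address in the usage note are assumptions for this example.

```python
import socket
import struct

IGMPV2_MEMBERSHIP_REPORT = 0x16  # message type for an IGMPv2 membership report


def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement of the ones' complement sum of the data."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF


def build_membership_report(group: str) -> bytes:
    """Build an IGMPv2 membership report for the given IPv4 multicast group."""
    group_bytes = socket.inet_aton(group)
    # The checksum is computed over the message with the checksum field set to zero.
    unchecked = struct.pack("!BBH4s", IGMPV2_MEMBERSHIP_REPORT, 0, 0, group_bytes)
    checksum = internet_checksum(unchecked)
    return struct.pack("!BBH4s", IGMPV2_MEMBERSHIP_REPORT, 0, checksum, group_bytes)
```

For example, `build_membership_report("239.1.2.3")` returns the report a host would send to join that group. In practice, applications rarely craft IGMP messages themselves: setting the `IP_ADD_MEMBERSHIP` socket option on a UDP socket asks the operating system to send the report on the application's behalf.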

The Bottom Line

In the fiercely competitive landscape of live streaming platforms, staying ahead of the curve and delivering exceptional viewer experiences is paramount. Technologies like P2P (Peer-to-Peer), Multicast Streaming, and HLS Low-Latency (LL-HLS) are not just tools in the toolkit; they are game-changers that can define success in the live streaming market.

Understanding these technologies is crucial because they empower content creators and streaming platforms to overcome the challenges of scalability, latency, and bandwidth optimization. P2P offers a cost-effective and efficient way to distribute content, reducing the load on central servers. Multicast Streaming ensures that content reaches a vast audience simultaneously without congesting the network. HLS Low-Latency elevates the streaming experience by minimizing delays, fostering interactivity, and catering to real-time demands.

To thrive and excel in the live streaming market, content providers, broadcasters, and streaming platforms must be well-versed in these technologies. Embracing P2P, Multicast Streaming, and HLS Low-Latency not only improves the quality and efficiency of content delivery but also allows for innovation in real-time engagement, interactive content, and immersive experiences.

In this ever-evolving landscape, being aware of and leveraging these technologies is not just a competitive advantage; it is an essential strategy for meeting the expectations of modern audiences and establishing a strong foothold in the dynamic world of live streaming. Those who master these tools will be better positioned to captivate viewers, optimize resources, and thrive in an increasingly crowded market.

If you are yet to start your live streaming journey, Muvi Live can be the best fit. Not only does it let you live stream seamlessly, but it also offers a range of competitive features like recurring live events, built-in CDN, live stream recording, live chat, and many more.

Start for free today to explore more.
