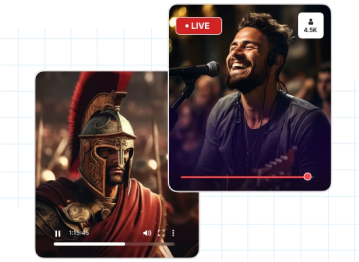

As audiences increasingly demand real-time interaction and immersive experiences, live video Software Development Kits (SDKs) emerge as essential tools for live streaming businesses. These SDKs offer a comprehensive suite of features and functionalities tailored to the unique needs of live streaming, enabling businesses to deliver seamless, high-quality live video experiences to their audiences.

From facilitating real-time communication to providing robust security measures and customizable branding options, a proper understanding of live video SDKs empowers businesses to harness the full potential of live streaming. By using these tools effectively, businesses can enhance user engagement, expand their reach, and drive growth in the competitive live streaming sector.

If you are wondering how and why, read on as we walk you through all you need to know about live video SDKs.

What are Live Video SDKs?

Live Video SDKs, or Software Development Kits, are comprehensive frameworks designed to empower developers with the necessary tools and resources to seamlessly integrate live video capabilities into various applications and services.

These SDKs encapsulate features for video capture, encoding, transmission, real-time communication, interactive elements, and audience engagement tools. They cater to diverse industries and applications, including live streaming platforms, video conferencing solutions, gaming experiences, and social networking applications.

Live Video SDKs often include robust security measures such as encryption, access controls, and authentication mechanisms to protect sensitive data and content during transmission.

A Complete Guide To Live Video SDKs in Live Streaming

Live Video Software Development Kits (SDKs) are essential components for integrating live streaming capabilities into applications and services. These SDKs provide developers with a comprehensive set of tools and resources to seamlessly incorporate live video functionalities. By leveraging Live Video SDKs, developers can facilitate video capture, encoding, transmission, real-time communication, interactive elements, and audience engagement features.

Selecting the appropriate Live Video SDK is crucial for ensuring the success and performance of a live streaming platform or application. When choosing an SDK, several factors should be considered:

- Compatibility: Ensure that the SDK is compatible with the target platform and technology stack.

- Scalability: Look for an SDK that can scale with the growing demands of the application and support increasing numbers of concurrent viewers.

- Features: Assess the features and functionalities offered by the SDK, such as video quality, latency, real-time communication, and audience engagement tools.

- Ease of Integration: Choose an SDK that is easy to integrate into existing applications and services, with comprehensive documentation and support resources.

- Security: Prioritize SDKs that offer robust security measures, including encryption, access controls, and authentication mechanisms, to protect sensitive data and content.

- Cost: Consider the pricing model of the SDK, including any licensing fees, subscription plans, or usage-based charges.

By carefully evaluating these factors, developers can select the right Live Video SDK to meet their specific requirements and objectives.

1. Features and Functionality Overview

Live Video SDKs offer a wide range of features and functionalities tailored to the needs of live streaming applications. Some of the key features include:

- Video Capture: Live Video SDKs enable developers to capture video from various sources, including webcams, cameras, and screen recordings.

- Encoding: These SDKs provide encoding capabilities to convert live video streams into formats suitable for transmission over the internet.

- Transmission: Live Video SDKs facilitate the transmission of live video streams to viewers’ devices, ensuring smooth and uninterrupted playback.

- Real-time Communication: Many SDKs support real-time communication features, such as chat, messaging, and voice/video calls, allowing for direct interaction between broadcasters and viewers.

- Interactive Elements: Live Video SDKs enable the integration of interactive elements, such as polls, quizzes, and live chat, to engage viewers and enhance the viewing experience.

- Audience Engagement Tools: These SDKs offer a variety of audience engagement tools, including audience analytics, viewer tracking, and audience segmentation, to help broadcasters understand their audience and tailor their content accordingly.

2. Integration and Compatibility Guidelines

Live video SDK integration begins with a thorough understanding of compatibility requirements and integration guidelines. This involves ensuring compatibility with the target platform, technology stack, and programming languages. Here’s an in-depth look at integration and compatibility considerations:

- Platform Compatibility: Live video SDKs should be compatible with the platform where they will be integrated, whether it’s a web application, mobile app, or desktop software. For example, SDKs should support iOS, Android, Windows, macOS, and various web browsers.

- Technology Stack Compatibility: Developers need to verify compatibility with the technology stack used in their application. This includes compatibility with web frameworks (e.g., React, Angular, Vue.js), mobile development platforms (e.g., Flutter, React Native), and backend technologies (e.g., Node.js, Django, Ruby on Rails).

- API Documentation and Support: Comprehensive API documentation and robust support channels are essential for successful integration. Developers rely on clear documentation and responsive support to troubleshoot issues and implement features effectively.

- Versioning and Updates: It’s crucial to stay informed about SDK version updates and changes. Developers must plan for version upgrades and manage dependencies to ensure compatibility with the latest features and improvements.

- Testing and Quality Assurance: Rigorous testing and quality assurance procedures are necessary to verify integration compatibility and functionality across different devices, browsers, and platforms. Emulators, simulators, and real-world testing scenarios help identify and address integration issues proactively.

3. Scalability and Performance Considerations

Scalability and performance are critical factors in live video streaming applications, especially when dealing with high concurrent viewer counts and fluctuating traffic volumes.

- Server Infrastructure: Scalable and redundant server infrastructure is essential to accommodate increasing viewer demand. Cloud-based services like AWS, Azure, and Google Cloud offer flexible scaling and resource allocation based on demand.

- Content Delivery Network (CDN): A robust CDN optimizes content delivery by distributing live video streams to viewers worldwide. Features like edge caching and network optimization reduce latency and improve performance.

- Load Balancing: Load balancing distributes incoming traffic evenly across multiple servers or instances to prevent overloads and ensure consistent performance. Dynamic scaling capabilities adapt to changing traffic patterns in real-time.

- Caching and Content Optimization: Content caching and optimization minimize latency and buffering by caching frequently accessed content and adapting content delivery based on network conditions and viewer devices.

- Monitoring and Analytics: Monitoring tools track key performance metrics like server health, network latency, bandwidth usage, and viewer engagement. Analytics provide insights to optimize resource utilization and improve scalability and performance.
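
The capacity planning behind these points comes down to simple arithmetic. The sketch below is a rough estimate only: the viewer count, average bitrate, per-node capacity, and 30% headroom are illustrative assumptions, and the function names are hypothetical rather than part of any SDK.

```python
import math


def egress_gbps(concurrent_viewers: int, avg_bitrate_mbps: float) -> float:
    """Aggregate delivery bandwidth in Gbit/s for a given audience size."""
    return concurrent_viewers * avg_bitrate_mbps / 1000.0


def edge_nodes_needed(total_gbps: float, node_capacity_gbps: float,
                      headroom: float = 0.3) -> int:
    """Nodes required when each node is loaded to at most (1 - headroom)."""
    usable_per_node = node_capacity_gbps * (1.0 - headroom)
    return math.ceil(total_gbps / usable_per_node)


if __name__ == "__main__":
    # Assumed scenario: 50,000 viewers at an average of 4 Mbit/s each.
    total = egress_gbps(50_000, 4.0)
    print(f"egress: {total:.0f} Gbit/s")
    print(f"10 Gbit/s nodes needed: {edge_nodes_needed(total, 10.0)}")
```

Under these assumptions, 50,000 viewers need about 200 Gbit/s of egress, or 29 nodes of 10 Gbit/s each when 30% of every node is kept in reserve for traffic spikes.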

4. Security and Encryption Protocols

Security and encryption are paramount in live video streaming to protect sensitive data, prevent unauthorized access, and ensure the privacy and integrity of video content.

- Transport Layer Security (TLS): TLS encrypts data in transit between clients and servers. Use modern versions (TLS 1.2 or TLS 1.3) with strong cipher suites, such as those based on AES-GCM, and certificates backed by RSA or ECDSA keys.

- End-to-End Encryption (E2EE): E2EE protects video content from capture to playback, ensuring only authorized viewers can access and decrypt the content. Robust encryption algorithms and key management practices are essential for security.

- Access Controls and Authentication: Standards such as OAuth 2.0 (a framework for delegated authorization) and JSON Web Tokens (JWT, a signed token format) help verify user identities and enforce access policies. Multi-factor authentication (MFA) adds an extra layer of security.

- Digital Rights Management (DRM): DRM solutions protect copyrighted video content from piracy and unauthorized distribution. Encryption, license management, and content protection mechanisms control access to premium content.

- Security Auditing and Compliance: Regular security audits and compliance assessments identify vulnerabilities and ensure adherence to security best practices and regulatory requirements like GDPR and HIPAA. Security controls, logging mechanisms, and intrusion detection systems detect and respond to security threats.
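
To make the access control idea concrete, here is a minimal sketch of an expiring, HMAC-signed playback token. It is a simplified stand-in for a JWT-style token, not a JWT implementation and not any vendor's API; the claim names (`sub`, `stream`, `exp`) and both function names are assumptions chosen for illustration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def issue_token(secret: bytes, viewer_id: str, stream_id: str,
                ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return 'payload.signature', both base64url encoded."""
    now = time.time() if now is None else now
    payload = json.dumps(
        {"sub": viewer_id, "stream": stream_id, "exp": int(now) + ttl_seconds},
        sort_keys=True,
    ).encode("utf-8")
    signature = hmac.new(secret, payload, hashlib.sha256).digest()
    return _b64(payload) + "." + _b64(signature)


def verify_token(secret: bytes, token: str, stream_id: str,
                 now: Optional[float] = None) -> bool:
    """Check signature first, then stream binding and expiry."""
    now = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return False
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes via timing.
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(payload)
    return claims.get("stream") == stream_id and claims.get("exp", 0) > now
```

The server that authorizes a viewer would issue the token, and the component serving the stream would verify it before delivering any content. A production system would more likely rely on an established JWT library and rotate its signing keys.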

5. Analytics and Monitoring Capabilities

Analytics and monitoring capabilities are essential components of any live video streaming platform, providing valuable insights into audience behavior, platform performance, and content effectiveness. These capabilities allow broadcasters to make data-driven decisions, optimize their content strategy, and enhance the viewer experience.

Analytics tools track various metrics, including:

- Viewer Engagement: Metrics such as viewer count, watch time, and engagement rate provide insights into audience engagement levels during live streams. This data helps broadcasters understand which content resonates most with their audience and identify opportunities for improvement.

- Audience Demographics: Analytics tools can capture demographic information such as age, gender, location, and device type of viewers. This data allows broadcasters to tailor their content to specific audience segments and optimize targeting strategies.

- Playback Quality: Monitoring tools track metrics related to playback quality, including buffering, latency, and video resolution. By monitoring these metrics, broadcasters can identify and address issues that may affect the viewing experience, such as network congestion or device compatibility issues.

- Content Performance: Analytics tools provide insights into the performance of individual pieces of content, including live streams, videos on demand, and interactive elements. This data helps broadcasters understand which content drives the most engagement and revenue, allowing them to prioritize content creation and distribution efforts accordingly.

- Real-time Monitoring: Real-time monitoring capabilities enable broadcasters to monitor key metrics and performance indicators in real-time, allowing them to respond quickly to issues and make adjustments as needed during live broadcasts.

6. Customization and Branding Options

Customization and branding options allow broadcasters to create a unique and immersive viewing experience that reflects their brand identity and values. These options help broadcasters differentiate themselves from competitors and build brand loyalty among viewers.

Some common customization and branding options include:

- Branded Player: Customizable video players allow broadcasters to incorporate their brand colors, logos, and visual elements into the player interface. This helps create a consistent brand experience across all video content and enhances brand recognition among viewers.

- Overlay Graphics: Overlay graphics and banners can be used to display promotional messages, call-to-action buttons, and other branded content during live streams. These graphics help reinforce brand messaging and drive viewer engagement.

- Custom Chat Experience: Customizable chat features allow broadcasters to customize the appearance and functionality of the chat interface, including font styles, colors, and moderation settings. This helps create a personalized and engaging chat experience for viewers while maintaining brand consistency.

- Interactive Elements: Interactive elements such as polls, quizzes, and live Q&A sessions can be branded with the broadcaster’s logo and colors to create a cohesive viewing experience. These interactive features help increase viewer engagement and foster a sense of community among viewers.

- Adaptive Branding: Adaptive branding options allow broadcasters to automatically adjust branding elements based on viewer demographics, device types, and viewing contexts. This ensures a consistent brand experience across different platforms and devices, regardless of the viewer’s location or device.

7. Future Trends and Innovations

The live video streaming industry is constantly evolving, driven by technological advancements, changing consumer preferences, and emerging trends. Some future trends and innovations to watch out for are:

- Augmented Reality (AR) Integration: AR technology allows broadcasters to overlay digital elements, graphics, and effects onto live video streams in real-time. This immersive experience enhances storytelling capabilities and provides new creative opportunities for content creators.

- Virtual Events and Experiences: Virtual events and experiences, such as virtual concerts, conferences, and trade shows, are becoming increasingly popular. These events allow viewers to participate and interact with content from anywhere in the world, opening up new revenue streams for broadcasters.

- Personalized Content Recommendations: AI-driven recommendation engines analyze viewer preferences, viewing history, and contextual data to provide personalized content recommendations. This helps viewers discover relevant content and increases engagement with the platform.

- Multi-platform Streaming: Multi-platform streaming solutions enable broadcasters to simultaneously stream live video content to multiple platforms and social media channels. This expands reach and audience engagement while streamlining content distribution efforts.

- Blockchain-based Monetization: Blockchain technology enables new monetization models for live video streaming, such as micropayments, tokenized rewards, and decentralized content ownership. This offers new revenue streams for content creators and enhances transparency in transactions.

8. SDK Performance Metrics Analysis

SDK performance metrics analysis is crucial for assessing the effectiveness and efficiency of software development kits (SDKs) used in live video streaming applications. By analyzing performance metrics, developers can identify bottlenecks, optimize resource utilization, and improve overall streaming performance. Here’s an in-depth look at some key performance metrics and their significance:

- Startup Time: Startup time refers to the time it takes for the SDK to initialize and begin streaming video content. A shorter startup time is desirable because it gives viewers a faster time-to-play and improves the user experience.

- Buffering Rate: Buffering rate measures the frequency and duration of buffering events during video playback. High buffering rates indicate poor network conditions or insufficient bandwidth, leading to interruptions in the streaming experience. Minimizing buffering is essential for delivering smooth and uninterrupted video playback.

- Playback Quality: Playback quality metrics include video resolution, frame rate, and visual artifacts such as pixelation or stuttering. Analyzing playback quality metrics helps ensure that video content is delivered in the highest quality possible, optimizing viewer satisfaction and engagement.

- Resource Consumption: Resource consumption metrics, such as CPU usage, memory utilization, and network bandwidth, provide insights into the SDK’s resource requirements. Optimizing resource consumption ensures efficient use of system resources and prevents performance degradation on the viewer’s device.

- Error Rates: Error rates measure the frequency of errors encountered during video streaming, such as network errors, codec errors, or playback failures. Analyzing error rates helps identify potential issues and troubleshoot problems to ensure seamless streaming experiences.

- Adaptability: Adaptability metrics assess the SDK’s ability to adapt to changing network conditions, device capabilities, and viewer preferences. An adaptive SDK can dynamically adjust video bitrate, resolution, and encoding parameters to optimize streaming performance and maintain playback quality.
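
Several of these metrics can be derived from per-session playback data. The sketch below assumes a hypothetical `Session` record that a player might report. The field names and the choice of median startup time, rebuffer ratio, and stalls per minute are illustrative conventions, not a standard defined by any SDK.

```python
from dataclasses import dataclass, field
from statistics import median


@dataclass
class Session:
    startup_ms: float          # play request until first rendered frame
    played_ms: float           # time spent actually playing video
    stalls_ms: list = field(default_factory=list)  # duration of each rebuffer
    failed: bool = False       # session ended with a fatal playback error


def summarize(sessions):
    """Aggregate startup, buffering, and error metrics across sessions."""
    if not sessions:
        raise ValueError("at least one session is required")
    played = sum(s.played_ms for s in sessions)
    stalled = sum(sum(s.stalls_ms) for s in sessions)
    stall_events = sum(len(s.stalls_ms) for s in sessions)
    started = [s.startup_ms for s in sessions if not s.failed]
    return {
        "median_startup_ms": median(started) if started else None,
        # Share of viewing time spent waiting on the buffer.
        "rebuffer_ratio": stalled / (played + stalled) if played + stalled else 0.0,
        "stalls_per_minute": stall_events / (played / 60_000) if played else 0.0,
        "error_rate": sum(s.failed for s in sessions) / len(sessions),
    }
```

Tracking these values per SDK version, device type, or region makes it easier to see whether a regression comes from the network, the player, or a specific release.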

9. Adaptive Bitrate Streaming Techniques

Adaptive bitrate streaming (ABR) is a technique used to optimize video delivery by dynamically adjusting video bitrate and resolution based on network conditions and device capabilities. ABR improves streaming quality and reliability by adapting to changing bandwidth availability and network congestion. Here are some key adaptive bitrate streaming techniques:

- Segmented Encoding: Video content is encoded into multiple bitrate/resolution profiles and divided into segments of fixed duration. During playback, the client device chooses, for each segment, the profile that best fits the available bandwidth and device capabilities.

- Bitrate Switching Algorithms: Bitrate switching algorithms monitor network conditions and viewer buffer status to determine when to switch between different bitrate/resolution profiles. Common approaches include throughput-based (e.g., FESTIVE, PANDA), buffer-based (e.g., BBA, BOLA), and learning-based (e.g., L2A) algorithms.

- Manifest Files: A manifest file (e.g., an HLS .m3u8 playlist or an MPEG-DASH MPD) provides metadata about available video segments and their corresponding bitrate/resolution profiles. The client device uses the manifest file to request and download segments dynamically.

- Buffer Management: The client device maintains a buffer of video segments to smooth out variations in network bandwidth and prevent buffering interruptions. Buffer management techniques optimize buffer size and playback latency to minimize buffering and improve streaming quality.

- Content Pre-fetching: Content pre-fetching techniques anticipate future segment requests and proactively download segments in advance to reduce latency and improve playback continuity. Pre-fetching can be based on predictive algorithms or user behavior patterns.
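
The selection step at the heart of ABR can be sketched in a few lines. This is a deliberately simple hybrid heuristic (a throughput rule with a low-buffer guard), not an implementation of any published algorithm. The bitrate ladder, the 0.8 safety factor, and the 5-second buffer threshold are assumptions for illustration.

```python
from statistics import harmonic_mean

# Hypothetical bitrate ladder in kbit/s, ascending. A real player reads
# the available renditions from the manifest.
LADDER_KBPS = [400, 1200, 2500, 5000]


def estimate_throughput_kbps(recent_samples_kbps):
    """Harmonic mean of recent segment download rates; damps one-off spikes."""
    return harmonic_mean(recent_samples_kbps)


def choose_bitrate(throughput_kbps, buffer_s, ladder=LADDER_KBPS,
                   safety=0.8, low_buffer_s=5.0):
    """Pick the rendition to request for the next segment."""
    # Buffer guard: when close to stalling, drop to the lowest rendition.
    if buffer_s < low_buffer_s:
        return ladder[0]
    # Throughput rule: highest rendition that fits within a safety margin.
    budget = throughput_kbps * safety
    fitting = [bitrate for bitrate in ladder if bitrate <= budget]
    return fitting[-1] if fitting else ladder[0]


if __name__ == "__main__":
    estimate = estimate_throughput_kbps([4000, 2000])
    print(choose_bitrate(estimate, buffer_s=12.0))
```

The safety factor keeps the player from requesting a rendition that only just fits the measured bandwidth, and the buffer guard trades quality for continuity when a stall is imminent.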

10. Low-Latency Protocol Implementation

Low-latency protocol implementation is essential for minimizing latency and ensuring real-time communication in live video streaming applications. Low-latency protocols reduce the time it takes for video packets to travel from the sender to the receiver, resulting in faster response times and improved interactivity. Here are some key low-latency protocol implementation techniques:

- UDP-Based Protocols: UDP-based protocols (e.g., WebRTC, SRT) avoid the in-order delivery and retransmission delays of TCP, favoring timeliness over guaranteed delivery. This makes them well suited to low-latency video streaming. SRT adds selective retransmission within a configurable latency window to recover lost packets without stalling the stream.

- Chunked Transfer Encoding: HTTP chunked transfer encoding lets a server send a video segment in smaller chunks while the segment is still being produced. The client device can begin playback as soon as the first chunk is received instead of waiting for the whole segment, reducing overall latency and improving streaming performance.

- Real-Time Transport Protocol (RTP): RTP is a standardized protocol for delivering real-time multimedia content over IP networks, usually on top of UDP. Each packet carries a sequence number and a timestamp, so receivers can reorder packets and keep jitter buffers small, which helps keep latency low.

- WebRTC: WebRTC is a free, open-source project that enables real-time communication capabilities in web browsers and mobile applications. WebRTC uses UDP-based transport and peer-to-peer connections to achieve low-latency video streaming without the need for plugins or additional software.

- HTTP/2 and QUIC: HTTP/2 is an application-layer protocol and QUIC is a UDP-based transport that underlies HTTP/3; both reduce latency for web-based delivery. HTTP/2 and HTTP/3 multiplex many requests over a single connection and compress headers, and QUIC additionally avoids TCP head-of-line blocking. HTTP/2 also defines server push, although browser support for it has declined.
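
As a concrete look at one of these protocols, the sketch below packs and parses the 12-byte fixed RTP header defined in RFC 3550. The sequence number and timestamp fields are what let a receiver reorder packets and size its jitter buffer. The function names are hypothetical, and header extensions and CSRC lists are left out to keep the example short.

```python
import struct

# Fixed RTP header (RFC 3550): V/P/X/CC byte, M/PT byte, 16-bit sequence
# number, 32-bit timestamp, 32-bit SSRC, all in network byte order.
RTP_HEADER = struct.Struct("!BBHII")


def pack_rtp_header(payload_type, sequence, timestamp, ssrc, marker=False):
    """Build a version-2 header with no padding, extension, or CSRCs."""
    byte0 = 2 << 6
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return RTP_HEADER.pack(byte0, byte1, sequence & 0xFFFF,
                           timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def parse_rtp_header(packet):
    """Decode the fixed header from the start of an RTP packet."""
    byte0, byte1, sequence, timestamp, ssrc = RTP_HEADER.unpack_from(packet)
    return {
        "version": byte0 >> 6,
        "padding": bool(byte0 & 0x20),
        "extension": bool(byte0 & 0x10),
        "csrc_count": byte0 & 0x0F,
        "marker": bool(byte1 & 0x80),
        "payload_type": byte1 & 0x7F,
        "sequence": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


if __name__ == "__main__":
    # Payload type 96 is in the dynamic range; its meaning is negotiated
    # out of band, for example through SDP.
    header = pack_rtp_header(96, sequence=1, timestamp=90_000, ssrc=0x1234)
    print(parse_rtp_header(header + b"payload-bytes"))
```

In practice an SDK or media library handles this layer, but seeing the header fields makes it clearer why RTP adds so little per-packet overhead.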

To Conclude

The technologies described above enable broadcasters to deliver high-quality, uninterrupted streaming experiences while adapting to varying network conditions and viewer preferences. By analyzing performance metrics, optimizing video delivery, and minimizing latency, broadcasters can enhance viewer engagement, improve user satisfaction, and differentiate themselves in a competitive market.

Muvi Live SDK offers a comprehensive solution for integrating live broadcasting capabilities into applications or building custom live streaming apps. Designed for developers, Muvi Live SDK provides seamless live streaming, faster time-to-market, and transparent pricing, making it an ideal choice for businesses looking to leverage live video streaming as part of their digital strategy. With Muvi Live SDK, developers can unlock the full potential of live streaming technology and deliver immersive, interactive experiences to their audiences across any platform.

Start for free today to explore more about Muvi Live and its powerful SDKs.
