Streaming video requires several technologies to work in unison. One part of that process is understanding the streaming protocols that deliver both live streams and VoD to your viewers. As more streaming solution providers, broadcasters, and CDN providers prepare for a future of widespread live streaming, the need for individual content producers to explore more efficient protocols has never been greater. This blog compares SRT, an emerging streaming protocol, with HLS and MPEG-DASH, the two most popular streaming standards.

What is SRT?

Initially developed by Haivision Systems Inc., SRT (Secure Reliable Transport) is an open-source video transport protocol and technology stack built to optimize streaming performance and keep streams secure across unreliable networks. Based on UDP, SRT can carry any type of data, but it is particularly optimized for audio/video streaming.

While streaming, video and audio packets sent between two points run into transport obstacles such as bandwidth fluctuations and packet loss. As a result, the video that reaches the endpoint is of lower quality than the original. SRT is designed to solve that issue.

By adapting to real-time network conditions, SRT optimizes video transport from one end to the other over unpredictable networks and minimizes packet loss, leading to a better QoE. It delivers high-quality video and audio with low latency. Not only that, with easy firewall traversal, SRT makes it possible to bring the best quality live video over the worst networks.

Benefits of SRT

- Designed to protect against jitter, packet loss, and bandwidth fluctuations due to network congestion, SRT gives you high-quality video and audio.

- Despite network challenges, video and audio are delivered with low latency, combining the reliability of TCP/IP delivery with the speed of UDP.

- Industry-standard AES 128/256-bit encryption secures content end to end over the internet, and SRT also simplifies firewall traversal.

- SRT is a royalty-free, next-generation open-source protocol that leads to cost-effective, interoperable, and future-proofed solutions.

Where is SRT Supported?

Since SRT is still relatively new, full-fledged implementation is a work in progress. Most of the major live streaming platforms haven’t yet adopted SRT into their systems, which means that they can’t yet be used as SRT endpoints. However, since it is open source and royalty-free, we expect more industry developers to integrate SRT into their systems in the coming months.

Application of SRT

SRT (Secure Reliable Transport) is an open-source video streaming protocol that is specifically designed for secure and reliable transmission of video over unreliable networks, such as the internet. SRT has gained significant popularity in the video streaming industry due to its low-latency, error correction, and congestion control features. Here are some key applications of SRT in video streaming:

- Live Video Contribution: SRT is commonly used for live video contribution from remote locations to a central broadcast facility or content distribution network. Its ability to handle varying network conditions, including high packet loss and fluctuating bandwidth, makes it ideal for transmitting live video over the internet.

- Remote Production: In remote production scenarios, where video feeds are captured from different locations and combined in real-time at a central production facility, SRT ensures that the video streams arrive with minimal delay and without degradation in quality.

- Point-to-Point Video Delivery: SRT is often used for point-to-point video delivery, where video content needs to be securely transmitted from one location to another over the internet. This application is prevalent in scenarios such as video conferencing, remote interviews, and live event coverage.

- Video Contribution over Public Internet: SRT is widely used for video contribution over the public internet, allowing content creators, broadcasters, and streaming platforms to receive video feeds from remote sources without the need for expensive dedicated networks.

- Adaptive Bitrate Streaming: SRT does not switch between renditions the way HLS and MPEG-DASH do, but it reports real-time network statistics that an encoder can use to raise or lower its bitrate as conditions change. This helps keep the stream smooth for end-users, even with varying internet speeds.

- Low-Latency Applications: SRT is favored in applications that require low-latency streaming, such as online gaming, real-time video monitoring, and interactive video applications.

- Content Distribution Networks (CDNs): SRT can be integrated with CDNs to efficiently distribute video content to end-users from multiple edge servers. By leveraging SRT’s congestion control and error recovery mechanisms, CDNs can provide a reliable video streaming experience.

- Encrypted Streaming: SRT supports end-to-end encryption, ensuring that video content remains secure and protected from unauthorized access during transmission.

- Multi-Platform Support: SRT is designed to work across different operating systems and devices, making it compatible with a wide range of software and hardware solutions used in the video streaming ecosystem.

How does the SRT Streaming protocol work?

SRT (Secure Reliable Transport) is an open-source streaming protocol designed for transmitting high-quality video, audio, and data over unreliable or unpredictable networks, such as the internet. It was developed to address the challenges of streaming media over long-distance and unreliable connections, offering low latency and high reliability. SRT is widely used in various industries, including broadcasting, video conferencing, and live streaming applications. Here’s an overview of how SRT works:

Connection Establishment and Negotiation:

The SRT protocol uses UDP (User Datagram Protocol) as its transport layer. When a connection is established, the sender and receiver exchange a handshake and negotiate the parameters for the transmission, such as the desired latency, encryption settings, and other network-specific settings.
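As a rough illustration of the parameters set at connection time, the sketch below assembles an `srt://` URI of the kind many SRT tools accept. The helper name `build_srt_uri` and the example address are hypothetical, and the query keys (`mode`, `latency`, `passphrase`) follow a common convention. Supported keys and units differ between tools, so treat them as assumptions to check against your own software.

```python
from typing import Optional
from urllib.parse import urlencode

# Connection roles an SRT endpoint can take during the handshake.
SRT_MODES = ("caller", "listener", "rendezvous")


def build_srt_uri(host: str, port: int, mode: str = "caller",
                  latency_ms: int = 120,
                  passphrase: Optional[str] = None) -> str:
    """Assemble an srt:// URI (hypothetical helper, not part of any SRT library)."""
    if mode not in SRT_MODES:
        raise ValueError(f"unknown SRT mode: {mode!r}")
    # Assumption: the receiving tool reads the latency value in milliseconds.
    params = {"mode": mode, "latency": latency_ms}
    if passphrase is not None:
        params["passphrase"] = passphrase
    return f"srt://{host}:{port}?{urlencode(params)}"


print(build_srt_uri("198.51.100.7", 9000))
# prints: srt://198.51.100.7:9000?mode=caller&latency=120
```

Keeping the settings in one URI makes it easy to see what both ends must agree on before any media flows.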

Packet Encryption and Authentication:

SRT supports encryption to ensure data security during transmission. It encrypts the payload of each packet with AES, the same AES 128/256-bit encryption listed among its benefits, using keys derived from a passphrase shared by the sender and receiver. This protects the stream from potential eavesdropping.
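SRT’s AES keys come from a passphrase that both ends share. The sketch below shows the general idea of turning a passphrase into a fixed-length key with Python’s standard `hashlib.pbkdf2_hmac`. The hash function, iteration count, and salt size are assumptions chosen for illustration and should not be read as SRT’s exact key schedule. The AES step itself is omitted because it needs a third-party library.

```python
import hashlib
import os


def derive_key(passphrase: str, salt: bytes, key_len: int = 16) -> bytes:
    """Stretch a passphrase into an AES-sized key (illustrative helper)."""
    if key_len not in (16, 24, 32):
        raise ValueError("key_len must be 16, 24, or 32 bytes")
    # Assumption: HMAC-SHA1 with 2048 iterations, picked for this sketch only.
    return hashlib.pbkdf2_hmac(
        "sha1", passphrase.encode("utf-8"), salt, 2048, dklen=key_len
    )


salt = os.urandom(16)  # random salt that both sides would need to know
key = derive_key("correct horse battery", salt)
print(len(key) * 8, "bit key")
```

The same passphrase and salt always yield the same key, which is what lets the receiver decrypt what the sender encrypted.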

Packet Loss Recovery and Error Correction:

One of the key features of SRT is its ability to handle packet loss and network jitter effectively. Its primary mechanism is selective retransmission: the receiver detects gaps in the packet sequence and asks the sender to resend only the missing packets. Forward error correction (FEC) is available as an optional addition. This ensures that even in challenging network conditions, the media stream remains smooth and continuous.
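A minimal sketch of retransmission-based recovery follows. It is a simplified model rather than SRT’s actual implementation: the function names are hypothetical, sequence-number wraparound is ignored, and losses after the highest received packet are not detected.

```python
from typing import Dict, List


def missing_sequences(received: List[int]) -> List[int]:
    """Return sequence numbers absent between the lowest and highest received."""
    if not received:
        return []
    seen = set(received)
    return [seq for seq in range(min(seen), max(seen) + 1) if seq not in seen]


def recover(sender_buffer: Dict[int, bytes],
            received: Dict[int, bytes]) -> Dict[int, bytes]:
    """Request every missing packet from the sender's buffer and merge it in."""
    repaired = dict(received)
    for seq in missing_sequences(list(received)):
        if seq in sender_buffer:  # the sender still holds a copy to resend
            repaired[seq] = sender_buffer[seq]
    return repaired


sent = {seq: f"pkt{seq}".encode() for seq in range(1, 8)}
arrived = {seq: data for seq, data in sent.items() if seq not in (3, 5, 6)}
print(missing_sequences(list(arrived)))  # prints: [3, 5, 6]
print(sorted(recover(sent, arrived)))    # prints: [1, 2, 3, 4, 5, 6, 7]
```

Only the three lost packets are requested again, which is why this approach costs less bandwidth than resending everything.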

Latency Control:

SRT is designed to achieve low latency streaming, making it suitable for real-time applications. The protocol allows users to control the latency by adjusting settings like buffer size, transmission window, and retransmission delay. This flexibility enables users to optimize the trade-off between latency and reliability based on their specific use case.
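The latency trade-off can be made concrete with a little arithmetic. The sketch below assumes that one retransmission attempt costs roughly one round trip, and it uses a multiplier of four times the round-trip time as a starting point. Both are simplifying assumptions for illustration, not values taken from this article.

```python
def suggested_latency_ms(rtt_ms: float, multiplier: float = 4.0) -> float:
    """Rule-of-thumb latency budget: a multiple of the round-trip time."""
    return rtt_ms * multiplier


def retransmission_rounds(latency_ms: float, rtt_ms: float) -> int:
    """How many resend attempts fit in the budget, at about one RTT each."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    return int(latency_ms // rtt_ms)


budget = suggested_latency_ms(30)
print(budget)                             # prints: 120.0
print(retransmission_rounds(budget, 30))  # prints: 4
```

A larger budget leaves room for more recovery attempts at the cost of a longer delay, which is the trade-off described above.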

Bandwidth Adaptation:

SRT does not re-encode the media itself, but it paces its sending rate and reserves extra bandwidth on top of the stream’s bit rate for retransmissions. Combined with the statistics it reports, this lets an encoder adapt its bit rate to fluctuating network conditions, so the stream can continue even when the available bandwidth varies.
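One way to reason about bandwidth is to add a retransmission allowance on top of the encoder’s bit rate and check that the total fits the link. The sketch below does that calculation. The 25% default overhead and the function names are assumptions for illustration.

```python
def max_send_rate_bps(input_bps: int, overhead_percent: int = 25) -> int:
    """Stream bit rate plus headroom reserved for resending lost packets."""
    return input_bps * (100 + overhead_percent) // 100


def fits_link(input_bps: int, link_bps: int, overhead_percent: int = 25) -> bool:
    """True when the stream and its retransmission headroom fit the link."""
    return max_send_rate_bps(input_bps, overhead_percent) <= link_bps


print(max_send_rate_bps(4_000_000))     # prints: 5000000
print(fits_link(4_000_000, 4_500_000))  # prints: False
```

If the check fails, the practical options are to lower the encoder bit rate or to find a link with more capacity.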

Firewall and NAT Traversal:

SRT incorporates NAT (Network Address Translation) traversal techniques, making it easier to establish connections between devices behind firewalls and routers. This feature simplifies the process of setting up and managing streams across different network topologies.

Sender and Receiver Statistics:

SRT provides valuable statistics and feedback to both the sender and receiver, allowing them to monitor the health of the stream and adjust as needed. This helps diagnose issues and optimize the streaming experience.

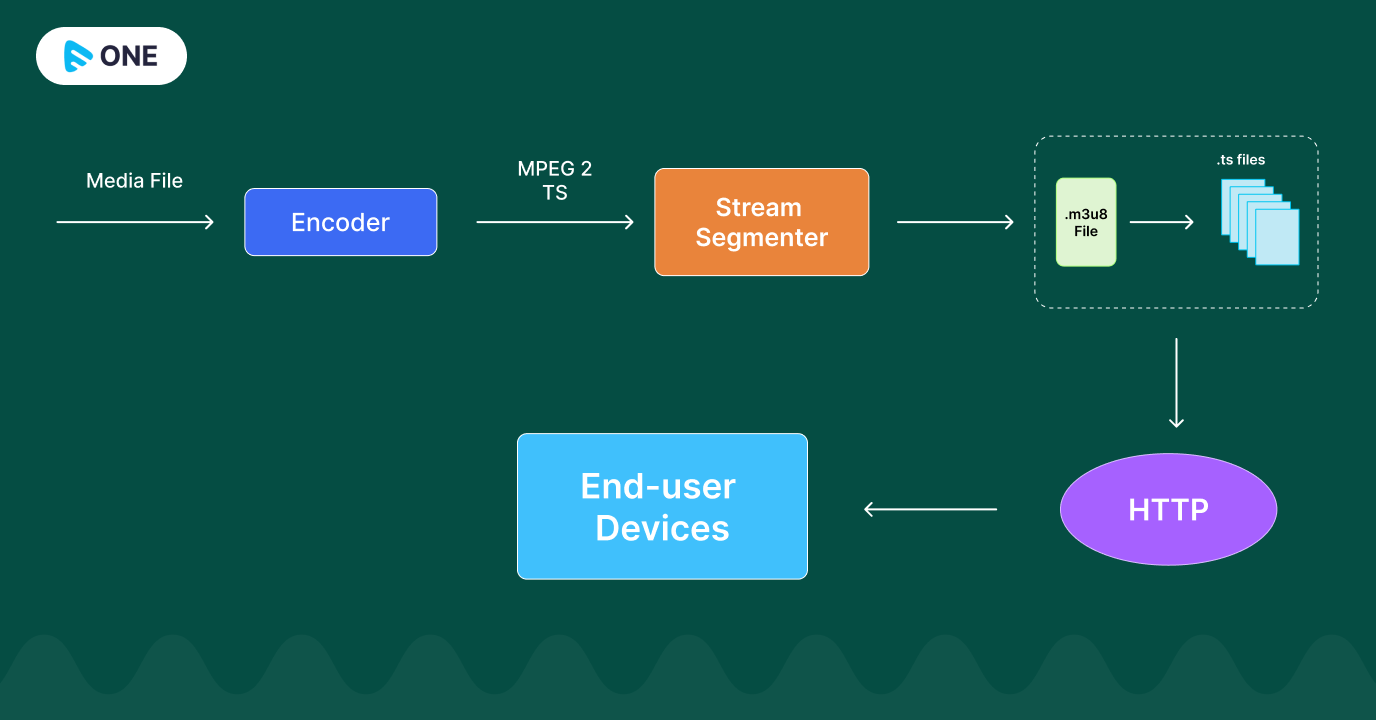

What is HLS?

Developed by Apple, HLS (short for HTTP Live Streaming) is a protocol for streaming live and on-demand video over the internet. It is an adaptive, HTTP-based streaming protocol that sends video and audio content over the network in small media chunks, delivered over TCP, that get reassembled during playback.

Initially, HLS was supported only by iOS. However, HLS has since become a widely adopted format, and almost every device supports it. That means a stream delivered via HLS will play back on the majority of devices, thereby expanding your audience. Google Chrome browsers, as well as Android, Linux, Microsoft, and macOS devices, can play streams delivered using HLS.

As the name suggests, HLS delivers video content via standard HTTP web servers. This means that you don’t have to integrate any special infrastructure to deliver HLS content.

Benefits of HLS

- One of the biggest advantages of HLS is adaptive bitrate streaming: different versions of the stream are made available at different resolutions and bitrates, allowing the player to switch to the most suitable quality during playback.

- High-quality video and audio delivery across poor-quality networks where low latency is not a requirement.

- Multiple audio track support for things like multi-language streams.

- HLS is compatible with a wide range of devices and, because it uses standard HTTP, passes through most firewalls.

- HLS supports metadata and other enhanced features.

- Maximum playback support, including iOS, Android, Linux, Microsoft, and macOS devices across web browsers like Chrome, Safari, Firefox, and Edge.

- DRM support

Application of HLS

HTTP Live Streaming (HLS) is a popular video streaming protocol developed by Apple. It has become widely adopted and is used for various applications in video streaming due to its adaptive streaming capabilities and compatibility with multiple devices and platforms. Here are some key applications of HLS in video streaming:

- Live Streaming: HLS is extensively used for live streaming events, sports, concerts, conferences, and other real-time content.

- Video-on-Demand (VOD): HLS is also utilized for delivering video-on-demand content. Video files are pre-segmented into small parts and stored on a server.

- Cross-Platform Compatibility: HLS is supported on a wide range of devices and platforms, including iOS devices (iPhone, iPad), macOS, Android devices, Windows PCs, smart TVs, and web browsers.

- Adaptive Bitrate Streaming: HLS dynamically adjusts the quality of the video stream based on the viewer’s network conditions. This ensures that viewers with varying internet speeds can enjoy smooth playback without buffering or interruptions.

- Content Delivery Networks (CDNs): CDNs are widely used to optimize content delivery and reduce latency. HLS is often integrated with CDNs to efficiently deliver video segments to end-users from geographically distributed servers.

- Content Protection: HLS supports encryption and DRM (Digital Rights Management) technologies, allowing content providers to protect their videos from unauthorized access and piracy.

- Server-Side Ad Insertion: HLS can be used for server-side ad insertion, allowing targeted ads to be inserted into the video stream without requiring client-side ad calls.

- Analytics and Monitoring: HLS can be integrated with analytics tools to gather valuable data on user engagement, viewership patterns, and other metrics.

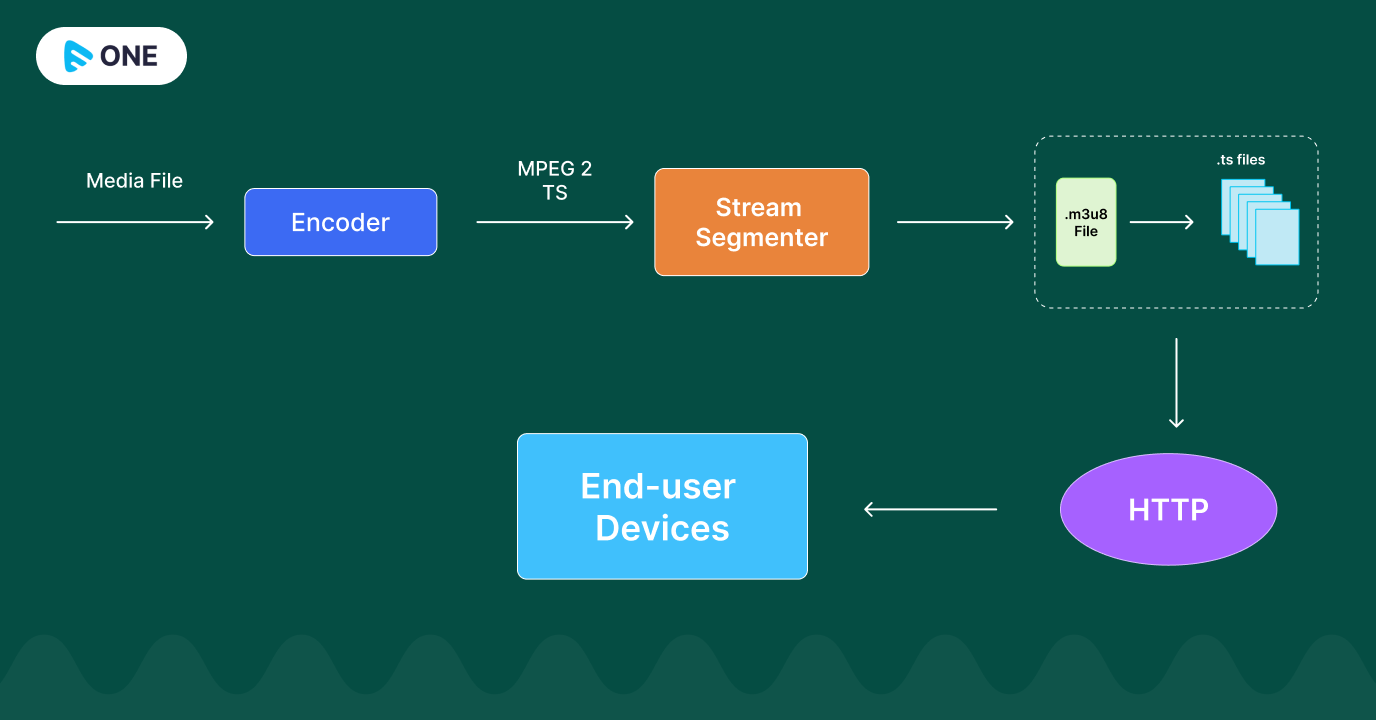

How does the HLS Streaming protocol work?

HTTP Live Streaming (HLS) is a popular streaming protocol developed by Apple for delivering live and on-demand multimedia content over the internet. It is widely used for streaming video and audio content to various devices, including smartphones, tablets, computers, and smart TVs. HLS works by breaking the content into small, manageable segments and using HTTP to deliver these segments to the client devices. Here’s an overview of how HLS streaming works:

Content Preparation:

The media content (video/audio) is first encoded into multiple versions at different bit rates and resolutions to accommodate various network conditions and device capabilities. These different versions are known as “variants” or “renditions.” Each variant is divided into smaller segments, typically lasting 2 to 10 seconds, but this duration can be adjusted based on the use case.
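The arithmetic behind segmentation is simple enough to sketch. The bitrate ladder below is a made-up example, and the 6-second segment length is just one value inside the range mentioned above.

```python
import math
from typing import Dict, List

# Hypothetical bitrate ladder: one entry per variant (rendition).
LADDER: List[Dict[str, int]] = [
    {"height": 360, "bitrate_bps": 800_000},
    {"height": 720, "bitrate_bps": 2_800_000},
    {"height": 1080, "bitrate_bps": 5_000_000},
]


def segment_count(duration_s: float, segment_s: float = 6.0) -> int:
    """Number of segments needed to cover the content, rounding up."""
    return math.ceil(duration_s / segment_s)


def total_segment_files(duration_s: float, ladder: List[Dict[str, int]],
                        segment_s: float = 6.0) -> int:
    """Every variant is segmented separately, so the file counts multiply."""
    return segment_count(duration_s, segment_s) * len(ladder)


print(segment_count(600))                # prints: 100
print(total_segment_files(600, LADDER))  # prints: 300
```

Shorter segments mean more files and more requests, while longer segments make quality switches slower to take effect.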

Manifest File Generation:

The server creates a manifest file, known as the “m3u8” playlist, which contains references to the available variants and their corresponding segment URLs. The manifest is a plain text file in the M3U8 playlist format and serves as the entry point for the client to access the content. It provides information about the available bit rates, resolutions, and segment URLs for each variant.
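To show what such a manifest looks like, the sketch below generates a minimal top-level playlist that lists each variant. The `#EXTM3U` and `#EXT-X-STREAM-INF` tags are standard HLS tags, while the variant values and file paths are invented for the example.

```python
from typing import Dict, List, Union

Variant = Dict[str, Union[int, str]]


def master_playlist(variants: List[Variant]) -> str:
    """Render a minimal HLS playlist that points at one playlist per variant."""
    lines = ["#EXTM3U"]
    for v in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v['bandwidth']},"
            f"RESOLUTION={v['width']}x{v['height']}"
        )
        lines.append(str(v["uri"]))  # the URI line follows its tag directly
    return "\n".join(lines) + "\n"


# Hypothetical variants and paths.
VARIANTS: List[Variant] = [
    {"bandwidth": 800_000, "width": 640, "height": 360, "uri": "360p/index.m3u8"},
    {"bandwidth": 2_800_000, "width": 1280, "height": 720, "uri": "720p/index.m3u8"},
]

print(master_playlist(VARIANTS))
```

Each variant’s own playlist would then list the segment URLs for that quality level.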

Content Delivery:

When a client (e.g., a web browser or media player) requests to play a stream, it first fetches the manifest file over HTTP. The manifest file contains the URLs to the media segments for each variant, allowing the client to choose the most suitable variant based on factors like network bandwidth and device capabilities.

Adaptive Bitrate Streaming:

HLS utilizes adaptive bitrate streaming to ensure smooth playback regardless of varying network conditions. The client selects an initial variant to play based on its capabilities and network conditions. As the playback progresses, the client continuously monitors the network and switches to a different variant if the available bandwidth changes. This way, the client can adjust the video quality in real-time, providing a better viewing experience.
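The switching decision can be sketched as a small function. Real players use more elaborate logic, so this is only one simple strategy: pick the highest variant that fits within a safety margin of the measured bandwidth. The 0.8 margin and the bitrates are assumptions.

```python
from typing import List


def choose_variant(bandwidths_bps: List[int], measured_bps: float,
                   safety: float = 0.8) -> int:
    """Pick the highest variant that fits within a share of measured bandwidth."""
    budget = measured_bps * safety
    affordable = [b for b in bandwidths_bps if b <= budget]
    # Fall back to the lowest variant when nothing fits the budget.
    return max(affordable) if affordable else min(bandwidths_bps)


variants = [800_000, 2_800_000, 5_000_000]
print(choose_variant(variants, 4_000_000))  # prints: 2800000
print(choose_variant(variants, 500_000))    # prints: 800000
```

The safety margin keeps the player from choosing a variant that would use the whole connection and stall on the first dip.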

Segment Download and Playback:

Once the client selects a variant, it starts downloading the media segments in sequence over HTTP. Each segment is a standalone file, so even if a connection is interrupted, the client can resume playback easily. As segments are fetched, they are played in order, resulting in a continuous playback experience.

Endless Streaming:

HLS is designed to support continuous streaming for both live and on-demand content. For live streaming, the media segments are generated in real-time and references to them are appended to the manifest file. This allows viewers to join the live stream at any time and receive the most recent segments.
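A live playlist is often kept as a sliding window: new segments are added at the end and the oldest ones drop off. The sketch below models that behaviour with standard HLS tags. The class name, window size, and segment names are hypothetical.

```python
from collections import deque
from typing import Deque


class LivePlaylist:
    """Sliding-window media playlist for a live stream (illustrative model)."""

    def __init__(self, window: int = 3, target_duration: int = 6) -> None:
        self.window = window
        self.target_duration = target_duration
        self.media_sequence = 0  # sequence number of the first listed segment
        self.segments: Deque[str] = deque()

    def add_segment(self, uri: str) -> None:
        self.segments.append(uri)
        if len(self.segments) > self.window:
            self.segments.popleft()   # drop the oldest segment
            self.media_sequence += 1  # tell players the window has moved

    def render(self) -> str:
        lines = [
            "#EXTM3U",
            "#EXT-X-VERSION:3",
            f"#EXT-X-TARGETDURATION:{self.target_duration}",
            f"#EXT-X-MEDIA-SEQUENCE:{self.media_sequence}",
        ]
        for uri in self.segments:
            lines.append(f"#EXTINF:{self.target_duration:.3f},")
            lines.append(uri)
        # An end-of-list tag is deliberately left out: the stream is still live.
        return "\n".join(lines) + "\n"


playlist = LivePlaylist(window=3)
for i in range(5):
    playlist.add_segment(f"seg{i}.ts")
print(playlist.render())
```

A viewer who joins late fetches this playlist and starts from the segments currently in the window.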

What is MPEG-DASH?

MPEG-DASH is the newest and by far the strongest competitor to HLS. Created by the Moving Picture Experts Group between 2009 and 2012, it uses standard HTTP web servers, like HLS. DASH is short for Dynamic Adaptive Streaming over HTTP, which means that, like HLS, it is an adaptive bitrate protocol.

MPEG-DASH is codec and resolution agnostic, which means it can stream video and audio in any format (H.264, H.265, AAC, etc.) and at any resolution, including 4K. Otherwise, MPEG-DASH functions much the same as HLS. To know more about HLS and MPEG-DASH, go through our blog on HLS vs MPEG DASH: Which Streaming Protocol Should You Choose?

Benefits of MPEG-DASH

- DASH is codec agnostic and supports almost every video codec available, including H.264, H.265/HEVC, and VP9 (often packaged in WebM).

- Supports all kinds of audio codecs including AAC and MP3

- Like HLS, MPEG-DASH supports adaptive bitrate streaming, allowing the player to detect the viewer’s internet speed and deliver the most suitable video resolution at the given moment.

- Like HLS, DASH is also capable of high-quality video and audio delivery across poor-quality networks.

- DRM support

Application of MPEG-DASH

- Video Streaming Services: MPEG-DASH is commonly used by video streaming platforms, such as YouTube, Netflix, Amazon Prime Video, and others. It allows these services to deliver high-quality video content to users while adjusting the streaming quality to match the user’s network connection and device capabilities.

- Live Streaming: MPEG-DASH is also employed for live streaming events, such as sports, concerts, conferences, and more. It ensures smooth delivery of live video content, adapting to fluctuations in network conditions to provide the best viewing experience to users.

- Over-the-Top (OTT) Services: OTT services deliver video content over the internet, bypassing traditional cable or satellite providers. MPEG-DASH is a popular choice for OTT providers due to its adaptive streaming capabilities, ensuring uninterrupted playback and reducing buffering.

- Video on Demand (VOD): With MPEG-DASH, video-on-demand platforms can efficiently deliver on-demand content to users, adjusting the streaming quality to maintain a smooth viewing experience.

- Virtual Reality (VR) and 360° Video: MPEG-DASH is used to stream VR and 360° videos, providing adaptive quality based on the user’s device and network conditions, enhancing the immersive experience.

- Digital Signage: In the context of digital signage applications, MPEG-DASH allows for flexible and efficient content delivery to multiple displays across various locations.

- Cloud Gaming: Cloud gaming services utilize MPEG-DASH to stream high-quality game content to users’ devices while dynamically adjusting the resolution and bitrate to maintain low-latency gaming experiences.

- Online Learning: Educational platforms that offer video-based courses can use MPEG-DASH to ensure seamless streaming for students, regardless of their network connection.

- Surveillance and Security: MPEG-DASH can be applied to real-time video surveillance systems, enabling the efficient and adaptive streaming of surveillance footage to remote monitoring stations.

- IoT Applications: In the context of the Internet of Things (IoT), MPEG-DASH can be used to stream multimedia content to connected devices, like smart TVs, home automation systems, and digital signage.

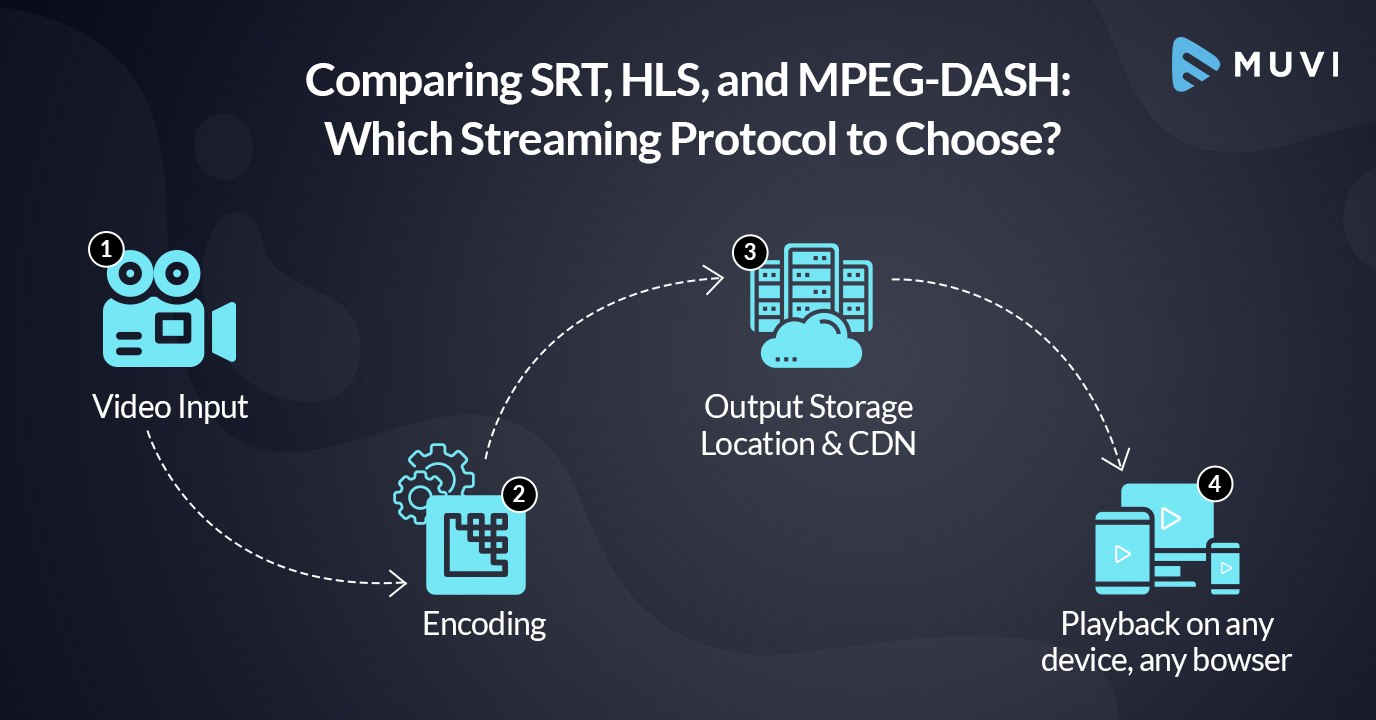

How does the MPEG-DASH Streaming protocol work?

MPEG-DASH (Dynamic Adaptive Streaming over HTTP) is a streaming protocol that enables efficient video delivery over the internet. It works by dividing the video content into small segments and dynamically adapting the quality of the stream based on the user’s network conditions and device capabilities. Here’s a step-by-step explanation of how MPEG-DASH works:

Content Preparation:

The video content is encoded into various bitrate representations or quality levels, typically using H.264 or H.265 video codecs and AAC audio. Each representation is divided into small segments, usually 2-10 seconds in duration.

Media Presentation Description (MPD):

The server generates a Media Presentation Description (MPD) file, which is an XML manifest that contains information about the available representations, segment URLs, and other metadata required for playback.
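To make the MPD less abstract, the sketch below parses a heavily trimmed example with Python’s standard XML library and lists the representations it finds. The sample document is invented for illustration and leaves out the segment information a real MPD carries.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Namespace used by MPD documents.
MPD_NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Trimmed, hypothetical MPD with two video representations.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="720p" bandwidth="2800000" width="1280" height="720"/>
      <Representation id="360p" bandwidth="800000" width="640" height="360"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_xml: str) -> List[Tuple[str, int]]:
    """Return (id, bandwidth) pairs sorted from lowest to highest bandwidth."""
    root = ET.fromstring(mpd_xml)
    found = []
    for rep in root.iterfind(".//mpd:Representation", MPD_NS):
        found.append((rep.get("id"), int(rep.get("bandwidth"))))
    return sorted(found, key=lambda item: item[1])


print(list_representations(SAMPLE_MPD))
# prints: [('360p', 800000), ('720p', 2800000)]
```

A player performs a similar parse before deciding which representation to request first.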

Client Request:

The client, such as a web browser or streaming application, initiates a streaming session by sending an HTTP request to the server, asking for the MPD file.

Adaptation Set and Representation Selection:

Upon receiving the MPD, the client parses it and identifies the available Adaptation Sets, each representing a set of representations with different qualities (bitrates and resolutions). The client then selects the appropriate representation based on factors like available bandwidth, device capabilities, and user preferences.

Segment Request and Download:

The client begins downloading video segments from the server using HTTP GET requests. Initially, the client may download segments of lower quality to start playback quickly and to ensure a smooth start.

Dynamic Adaptation:

As the video playback progresses, the client continuously monitors the network conditions. If the network bandwidth improves, the client may request higher-quality segments to enhance the viewing experience. Conversely, if the network conditions deteriorate, the client may request lower-quality segments to avoid buffering.
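The adaptation loop described above can be sketched with two small functions: one smooths the throughput measurements, and the other picks a representation while guarding against an almost empty buffer. The smoothing factor, safety margin, and buffer threshold are assumptions, and real players differ in how they combine these signals.

```python
from typing import List


def smooth_throughput(previous_bps: float, sample_bps: float,
                      alpha: float = 0.3) -> float:
    """Exponentially weighted average so one bad sample does not dominate."""
    return alpha * sample_bps + (1 - alpha) * previous_bps


def pick_representation(bandwidths_bps: List[int], estimate_bps: float,
                        buffer_s: float, low_buffer_s: float = 5.0,
                        safety: float = 0.8) -> int:
    """Choose a representation from the throughput estimate and buffer level."""
    lowest = min(bandwidths_bps)
    if buffer_s < low_buffer_s:
        return lowest  # buffer nearly empty: prioritise avoiding a stall
    affordable = [b for b in bandwidths_bps if b <= estimate_bps * safety]
    return max(affordable) if affordable else lowest


reps = [800_000, 2_800_000, 5_000_000]
estimate = smooth_throughput(4_000_000, 2_000_000, alpha=0.5)
print(estimate)                                              # prints: 3000000.0
print(pick_representation(reps, 7_000_000, buffer_s=20.0))   # prints: 5000000
print(pick_representation(reps, 7_000_000, buffer_s=2.0))    # prints: 800000
```

Considering the buffer as well as the throughput lets the player step down before the viewer actually sees buffering.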

Seamless Switching:

The client can smoothly switch between representations during playback. This process is usually achieved by buffering a few segments ahead and behind the current playback point to ensure a seamless transition.

End of Streaming:

The streaming session continues until the user stops playback or exits the application. The client will stop requesting segments, and the streaming session is terminated.

Which Streaming Protocol is the Best for you?

Streaming protocols perform differently in different areas, and you should analyze them carefully before settling on one. Some of the parameters that determine the performance of streaming protocols are as follows:

- Scalability

- Latency

- Playback support

- Support for adaptive bitrate streaming

- Proprietary or open-source

- Codec requirements

- First-mile contribution vs. last-mile delivery

Prioritizing the above parameters helps you narrow down what’s best for you.

While SRT is a great bet for first-mile contribution, both HLS and DASH win hands down when it comes to playback. Better playback compatibility leads to a wider reach, which leads to more engagement.

If latency or poor network conditions aren’t an issue, then HLS or MPEG-DASH beats out SRT. Both DASH and HLS are ABR enabled which helps in delivering the best possible video quality to viewers with different network conditions. Also, DASH and HLS are more straightforward to set up than SRT.

If you compare DASH and HLS, the scale of compatibility tips towards HLS. Here’s why: iOS users represent 25.26% of the global mobile operating system market share. Most of these users can’t play MPEG-DASH video streams unless they use third-party browsers.

Muvi supports RTMP and HLS feeds for your Live Streaming service. You just have to enter your live feed URL in the backend and Muvi’s secure Online Video Player will embed the feed for immediate playback to end-users.

Try our 14-day FREE trial, now!

FAQs

Which is More Widely Supported?

HLS and MPEG-DASH are more established and widely supported, while SRT is gaining traction and seeing increased adoption in specific use cases.

Which Offers Higher-Quality Streaming?

All three protocols (SRT, HLS, and MPEG-DASH) can offer high-quality streaming experiences when implemented appropriately and with the right video encoding settings. The adaptive bitrate streaming capabilities of HLS and MPEG-DASH contribute to smoother playback by adjusting the quality to match network conditions.

Which Protocol is More Reliable?

SRT is designed explicitly to address challenges related to unreliable networks and is likely the most reliable choice among the three protocols. It provides error recovery, retransmission, and other mechanisms to maintain a stable video stream even under adverse network conditions. HLS and MPEG-DASH, on the other hand, are more focused on adaptive bitrate streaming and are generally reliable in typical network scenarios, but they may not offer the same level of resilience as SRT for particularly challenging network conditions.

Which Protocol Should You Use?

The best protocol for your use case depends on factors such as the type of content you are delivering, the target audience and their devices, the level of reliability required, and the network conditions you expect to encounter. It’s essential to assess your specific streaming needs and conduct thorough testing to determine which protocol aligns best with your requirements.

Which is better: low latency HLS or DASH?

Both low latency HLS and low latency DASH have their advantages and are capable of reducing latency for live streaming. The decision on which is “better” depends on your specific use case, platform support requirements, existing infrastructure, and implementation preferences. Evaluate your streaming needs and conduct tests to determine which protocol aligns best with your desired latency and viewing experience.
